Chapter 6 JointAI: Joint Analysis and Imputation of Incomplete Data in R

This chapter is based on

Nicole S. Erler, Dimitris Rizopoulos and Emmanuel M. E. H. Lesaffre. JointAI: Joint Analysis and Imputation of Incomplete Data in R. (Manuscript in preparation)

Abstract

Missing data occur in many types of studies and typically complicate the analysis. Multiple imputation, either using joint modelling or the more flexible full conditional specification approach, is popular and works well in standard settings. However, in settings involving non-linear associations or interactions, incompatibility of the imputation model with the analysis model is an issue often resulting in bias. Similarly, complex outcomes such as longitudinal or survival outcomes cannot be adequately handled by standard implementations.

In this chapter, we introduce the R package JointAI, which utilizes the Bayesian framework to perform simultaneous analysis and imputation in regression models with incomplete covariates. Using a fully Bayesian joint modelling approach it overcomes the issue of uncongeniality while retaining the attractive flexibility of fully conditional specification multiple imputation by specifying the joint distribution of analysis and imputation models as a sequence of univariate models that can be adapted to the type of variable. JointAI provides functions for Bayesian inference with generalized linear and generalized linear mixed models as well as survival models, that take arguments analogous to their corresponding and well known complete data versions from base R and other packages. Usage and features of JointAI are described and illustrated using various examples and the theoretical background is outlined.

6.1 Introduction

Missing data are a challenge common to the analysis of data from virtually all kinds of studies. Especially when many variables are measured, as in big cohort studies, or when data are obtained retrospectively, e.g., from registries, proportions of missing values up to 50% are not uncommon in some variables.

Multiple imputation, which is often considered the gold standard to handle incomplete data, has its origin in the 1970s and was primarily developed for survey data (Rubin 1987, 2004). One of its first implementations in R (R Core Team 2018) is the package norm (Novo and Schafer 2010), which performs multiple imputation under the joint modelling framework using a multivariate normal distribution (Schafer 1997). Nowadays, multiple imputation using a fully conditional specification (FCS), also called multiple imputation using chained equations (MICE), and its seminal implementation in the R package mice (Van Buuren and Groothuis-Oudshoorn 2011; Van Buuren 2012), is more frequently used.

Datasets have gotten more complex compared to the survey data multiple imputation was developed for. Therefore, more sophisticated methods that can adequately handle the features of modern data and comply with the assumptions made in its analysis are required. Modern studies do not only record univariate outcomes, measured in cross-sectional settings, but outcomes that consist of two or more measurements, such as repeatedly measured or survival outcomes. Furthermore, non-linear effects, introduced by functions of covariates, such as transformations, polynomials or splines, or interactions between variables are considered in the analysis and hence need to be taken into account during imputation.

Standard multiple imputation, either using FCS or a joint modelling approach, e.g., under a multivariate normal distribution, assumes linear associations between all variables. Moreover, FCS requires the outcome to be explicitly specified in each of the linear predictors of the full conditional distributions. In settings where the outcome is more complex than just univariate, this is not straightforward and not generally possible without information loss, leading to misspecified imputation models.

Some extensions of standard multiple imputation have been developed and are implemented in R packages and other software, but the larger part of software for imputation is restricted to standard settings such as cross-sectional survey data. R packages that offer extensions frequently focus on particular settings and researchers need to be familiar with a number of different packages, which often require input and specifications in very different forms. Moreover, most R packages dealing with incomplete data implement multiple imputation, i.e., create multiple imputed datasets, which are then analysed in a second step, followed by pooling of the results.

The R package JointAI (Erler 2019), which is presented in this chapter, follows a different, fully Bayesian approach. It provides a unified framework for both simple and more complex models, using a consistent specification most users will be familiar with from commonly used (base) R functions. By modelling the analysis model of interest jointly with the incomplete covariates, analysis and imputation can be performed simultaneously while assuring compatibility between all sub-models (Erler et al. 2016, 2019). In this joint modelling approach, the added uncertainty due to the missing values is automatically taken into account in the posterior distribution of the parameters of interest, and no pooling of results from repeated analyses is necessary. The joint distribution is specified in a convenient way, using a sequence of conditional distributions that can be specified flexibly according to each type of variable. Since the analysis model of interest defines the first distribution in the sequence, the outcome is included in the joint distribution without the need for it to enter the linear predictor of any of the other models. Moreover, non-linear associations that are part of the analysis model are automatically taken into account for the imputation of missing values. This directly enables our approach to handle complicated models, with complex outcomes and flexible linear predictors.

In the following, we introduce the R package JointAI, which performs joint analysis and imputation of regression models with incomplete covariates under the Missing At Random assumption (Rubin 1976), and explain how data with incomplete covariate information can be analysed and imputed with it. The package is available for download at the Comprehensive R Archive Network (CRAN) under https://CRAN.R-project.org/package=JointAI. Section 6.2 briefly describes the theoretical background. An outline of the general structure of JointAI is given in Section 6.3, followed by an introduction to example datasets that are used throughout this chapter, in Section 6.4. Details about model specification, settings controlling the Markov Chain Monte Carlo sampling, and summary, plotting and other functions that can be applied after fitting the model are given in Sections 6.5 through 6.7. We conclude the paper with an outlook of planned extensions and discuss the limitations that are introduced by the assumptions made in the sequential imputation approach.

6.2 Theoretical Background

Consider the general setting of a regression model where interest lies in a set of parameters \(\boldsymbol\theta\) that describe the association between a univariate outcome \(\mathbf y\) and a set of covariates \(\mathbf X = (\mathbf x_1, \ldots, \mathbf x_p)\). In the Bayesian framework, inference over \(\boldsymbol\theta\) is obtained by estimation of the posterior distribution of \(\boldsymbol\theta\), which is proportional to the product of the likelihood of the data \((\mathbf y, \mathbf X)\) and the prior distribution of \(\boldsymbol\theta\), \[ p(\boldsymbol\theta\mid \mathbf y, \mathbf X) \propto p(\mathbf y, \mathbf X \mid \boldsymbol\theta)\,p(\boldsymbol\theta).\]

When some of the covariates are incomplete, \(\mathbf X\) consists of two parts, the completely observed variables \(\mathbf X_{obs}\) and those variables that are incomplete, \(\mathbf X_{mis}\). If \(\mathbf y\) had missing values (and this missingness was ignorable), the only necessary change in the formulas below would be to replace \(\mathbf y\) by \(\mathbf y_{mis}\). We will, therefore, without loss of generality, consider \(\mathbf y\) to be completely observed.

The likelihood of the complete data, i.e., observed and unobserved, can be factorized in the following convenient way: \[p(\mathbf y, \mathbf X_{obs}, \mathbf X_{mis} \mid \boldsymbol\theta) = p(\mathbf y \mid \mathbf X_{obs}, \mathbf X_{mis}, \boldsymbol\theta_{y\mid x})\, p(\mathbf X_{mis} \mid \mathbf X_{obs}, \boldsymbol\theta_x), \] where the first factor constitutes the analysis model of interest, described by a vector of parameters \(\boldsymbol\theta_{y\mid x}\), and the second factor is the joint distribution of the incomplete variables, i.e., the imputation part of the model, described by parameters \(\boldsymbol\theta_x\), and \(\boldsymbol\theta = (\boldsymbol\theta_{y\mid x}^\top, \boldsymbol\theta_x^\top)^\top\).

Explicitly specifying the joint distribution of all data is one of the major advantages of the Bayesian approach, since this facilitates the use of all available information of the outcome in the imputation of the incomplete covariates (Erler et al. 2016), which becomes especially relevant for more complex outcomes such as repeatedly measured variables (see Section 6.2.2).

In complex models, the posterior distribution can usually not be analytically derived but Markov Chain Monte Carlo (MCMC) methods are used to obtain samples from the posterior distribution. The MCMC sampling in JointAI is done using Gibbs sampling, which iteratively samples from the full conditional distributions of the unknown parameters and missing values.

In the following sections, we describe each of the three parts of the model, the analysis model, the imputation part and the prior distributions, in detail.

6.2.1 Analysis Model

The analysis model of interest is described by the probability density function \(p(\mathbf y \mid \mathbf X, \boldsymbol\theta_{y\mid x})\). The R package JointAI can currently handle analysis models that are either generalized linear regression models (GLM), (generalized) linear mixed models (GLMM), cumulative logit (mixed) models, parametric (Weibull) survival models or Cox proportional hazards models.

For a GLM, the probability density function is chosen from the exponential family and the model has the linear predictor \[g(E(y_i\mid \mathbf X, \boldsymbol\theta_{y\mid x})) = \mathbf x_i^\top\boldsymbol\beta,\] where \(g(\cdot)\) is a link function, \(y_i\) the value of the outcome variable for subject \(i\), and \(\mathbf x_i\) is the row of \(\mathbf X\) that contains the covariate information for \(i\).

For a GLMM the linear predictor is of the form \[g(E(y_{ij}\mid \mathbf X, \mathbf b_i, \boldsymbol\theta_{y\mid x})) = \mathbf x_{ij}^\top\boldsymbol\beta + z_{ij}^\top\mathbf b_i,\] where \(y_{ij}\) is the \(j\)-th outcome of subject \(i\), \(\mathbf x_{ij}\) is the corresponding vector of covariate values, \(\mathbf b_i\) a vector of random effects pertaining to subject \(i\), and \(\mathbf z_{ij}\) the row of the design matrix of the random effects, \(\mathbf Z\), that corresponds to the \(j\)-th measurement of subject \(i\). \(\mathbf Z\) typically contains a subset of the variables in \(\mathbf X\), and \(\mathbf b_i\) follows a normal distribution with mean zero and covariance matrix \(\mathbf D\).

In both cases the parameter vector \(\boldsymbol\theta_{y\mid x}\) contains the regression coefficients \(\boldsymbol\beta\), and potentially additional variance parameters (e.g., for linear (mixed) models), for which prior distributions will be specified in Section 6.2.3.

Cumulative logit mixed models are of the form \[\begin{eqnarray*} y_{ij} &\sim& \text{Multinom}(\pi_{ij,1}, \ldots, \pi_{ij,K}),\\[2ex] \pi_{ij,1} &=& P(y_{ij} \leq 1),\\ \pi_{ij,k} &=& P(y_{ij} \leq k) - P(y_{ij} \leq k-1), \quad k \in 2, \ldots, K-1,\\ \pi_{ij,K} &=& 1 - \sum_{k = 1}^{K-1}\pi_{ij,k},\\[2ex] \text{logit}(P(y_{ij} \leq k)) &=& \gamma_k + \eta_{ij}, \quad k \in 1,\ldots,K,\\ \eta_{ij} &=& x_{ij}^\top\boldsymbol\beta + z_{ij}^\top\mathbf b_i,\\[2ex] \gamma_1,\delta_1,\ldots,\delta_{K-1} &\overset{iid}{\sim}& N(\mu_\gamma, \sigma_\gamma^2),\\ \gamma_k &\sim& \gamma_{k-1} + \exp(\delta_{k-1}),\quad k = 2,\ldots,K, \end{eqnarray*}\] where \(\pi_{ij,k} = P(y_{ij} = k)\) and \(\text{logit}(x) = \log\left(\frac{x}{1-x}\right)\). A cumulative logit regression model for a univariate outcome \(y_i\) can be obtained by dropping the index \(j\) and omitting \(z_{ij}^\top\mathbf b_i\). In cumulative logit (mixed) models, the design matrix \(\mathbf X\) does not contain an intercept, since outcome category specific intercepts \(\gamma_1,\ldots, \gamma_K\) are specified. Here, the parameter vector \(\boldsymbol \theta_{y\mid x}\) includes the regression coefficients \(\boldsymbol\beta\), and the first intercept \(\gamma_1\) and increments \(\delta_1, \ldots, \delta_{K-1}\).

Survival data are typically characterized by the observed event or censoring times, \(T_i\), and the event indicator, \(D_i\), which is equal to one if the event was observed and zero otherwise. JointAI provides two types of models to analyse right censored survival data, a parametric model assuming a Weibull distribution for the true (but partially unobserved) survival times \(T^*\), and a semi-parametric Cox proportional hazards model.

The parametric survival model is implemented as \[\begin{eqnarray*} T_i^* &\sim& \text{Weibull}(1, r_i, s),\\ D_i &\sim& \unicode{x1D7D9}(T_i^* \geq C_i),\\ \log(r_j) &=& - \mathbf x_i^\top\boldsymbol\beta,\\ s &\sim& \text{Exp}(0.01), \end{eqnarray*}\] where \(\unicode{x1D7D9}\;\) is the indicator function which is one if \(T_i^* \geq C_i\), and zero otherwise.

For the Cox proportional hazards model, following Lunn et al. (2012), a counting process representation is implemented, where the baseline hazard is assumed to be piecewise constant and changes only at observed event times. Let \(\{N_i(t), t \geq 0\}\) be an event counting process for individual \(i\), where \(N_i(t) = 0\) until the individual experiences an event and increases by one at the time of the event. \(dN_i(t)\) then denotes the change in \(N_i(t)\) in the interval \([t, t+dt)\), where \(dt\) is the length of that interval, and can be modelled as a Poisson random variable with time-varying intensity \(\lambda_i(t)\). This intensity depends on covariates \(\mathbf x_i\), the baseline hazard \(\lambda_0(t)\), and the risk set indicator \(R_i(t)\), which is equal to one if, at time \(t\), subject \(i\) is at risk for an event, and zero otherwise. \[\begin{eqnarray*} dN(t)_i &\sim& \text{Poisson}(\lambda_i(t)),\quad t \in 0,\ldots, T\\ \lambda_i(t) &=& \exp(\mathbf x_i^\top\boldsymbol\beta) \; \lambda_0(t) \; R_i(t)\\ \lambda_0(t) &\sim& \text{Gamma}(c\lambda_0(t)^*, c) \end{eqnarray*}\] where \(\lambda_0(t)^*\) is a prior guess of the failure rate at time \(t\), and \(c\) represents the confidence about that prior guess.

6.2.2 Imputation Part

A convenient way to specify the joint distribution of the incomplete covariates \(\mathbf X_{mis} = (\mathbf x_{mis_1}, \ldots, \mathbf x_{mis_q})\) is to use a sequence of conditional univariate distributions (Erler et al. 2016; Ibrahim, Chen, and Lipsitz 2002) \(\boldsymbol\theta_{x} = (\boldsymbol\theta_{x_1}^\top, \ldots, \boldsymbol\theta_{x_q}^\top)^\top\).

Each of the conditional distributions is a member of the exponential family, extended with distributions for ordinal categorical variables, and chosen according to the type of the respective variable. Its linear predictor is \[ g_\ell\left\{E\left(x_{i,mis_\ell} \mid \mathbf x_{i,obs}, \mathbf x_{i, mis_{<\ell}}, \boldsymbol\theta_{x_\ell}\right) \right\} = (\mathbf x_{i, obs}^\top, x_{i, mis_1}, \ldots, x_{i, mis_{\ell-1}}) \boldsymbol\alpha_{\ell}, \] for \(\ell=1,\ldots,q\), where \(\mathbf x_{i,mis_{<\ell}} = (x_{i,mis_1}, \ldots, x_{i,mis_{\ell-1}})^\top\).

Factorization of the joint distribution of the covariates in such a sequence yields a straightforward specification of the joint distribution, even when the covariates are of mixed type.

Missing values in the covariates are sampled from their full conditional distributions that can be derived from the full joint distribution of outcome and covariates.

When, for instance, the analysis model is a GLM, the full conditional distribution of an incomplete covariate \(x_{i, mis_{\ell}}\) can be written as \[\begin{eqnarray} p(x_{i, mis_{\ell}} \mid \mathbf y_i, \mathbf x_{i,obs}, \mathbf x_{i,mis_{-\ell}}, \boldsymbol\theta) & \propto& p \left(y_i \mid \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{y\mid x} \right)\, p(\mathbf x_{i, mis}\mid \mathbf x_{i, obs}, \boldsymbol\theta_{x})\\ & & p(\boldsymbol\theta_{y\mid x})\, p(\boldsymbol\theta_{x})\\\nonumber &\propto& p\left(y_i \mid \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{y\mid x} \right)\, p(x_{i, mis_\ell} \mid \mathbf x_{i, obs}, \mathbf x_{i, mis_{<\ell}}, \boldsymbol\theta_{x_\ell})\\ & &\left\{\prod_{k=\ell+1}^q p(x_{i,mis_k}\mid \mathbf x_{i, obs}, \mathbf x_{i, mis_{<k}}, \boldsymbol\theta_{x_k}) \right\}\, p(\boldsymbol\theta_{y\mid x}) p(\boldsymbol\theta_{x_\ell})\\ & & \prod_{k=\ell+1}^p \, p(\boldsymbol\theta_{x_k}),\tag{6.2} \end{eqnarray}\] where \(\boldsymbol\theta_{x_{\ell}}\) is the vector of parameters describing the model for the \(\ell\)-th covariate, and contains the vector of regression coefficients and potentially additional (variance) parameters. The product of distributions enclosed by curly brackets represents the distributions of those covariates that have \(x_{mis_\ell}\) as a predictive variable in the specification of the sequence in (6.1).

Even though (6.2) describes the actual imputation model, i.e., the distribution the imputed values for \(x_{i, mis_{\ell}}\) are sampled from, we will use the term “imputation model” for the conditional distribution of \(x_{i, mis_{\ell}}\) from (6.1), since the latter is the distribution that is explicitly specified by the user and, hence, of more relevance when using JointAI.

Imputation with Longitudinal Outcomes

Factorizing the joint distribution into the analysis model and imputation part allows a straightforward extension to settings with more complex outcomes, such as repeatedly measured outcomes. In the case where the analysis model is a GLMM, the conditional distribution of the outcome in (6.2), \(p(y_i \mid \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{y\mid x})\), has to be replaced by \(\mathbf y\) does not appear in any of the other terms in (6.2), and (6.3) can be chosen to be a model that is appropriate for the outcome at hand, the thereby specified full conditional distribution of \(x_{i, mis_\ell}\) allows us to draw valid imputations that use all available information on the outcome.

This is an important difference to standard FCS, where the full conditional distributions used to impute missing values are specified directly, usually as regression models, and require the outcome to be explicitly included in the linear predictor of the imputation model. In settings with complex outcomes, it is not clear how this should be done, and simplifications may lead to biased results (Erler et al. 2016). The joint model specification utilized in JointAI overcomes this difficulty.

When some of the covariates are time-varying, it is convenient to specify models for these variables at the beginning of the sequence of covariate models, so that models for longitudinal variables have other longitudinal and baseline covariates in their linear predictor, but longitudinal covariates do not enter the predictors of baseline covariates.

Note that whenever there are incomplete baseline covariates it is necessary to specify models for all longitudinal variables, even completely observed ones, while models for completely observed baseline covariates can be omitted. This becomes clear when we extend the factorized joint distribution from above with completely and incompletely observed covariates \(s_{obs}\) and \(s_{mis}\): \[\begin{multline*} p(y_{ij} \mid \mathbf s_{ij, obs}, \mathbf s_{ij, mis}, \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{y\mid x})\, p(\mathbf s_{ij, mis}\mid \mathbf s_{ij, obs}, \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{s_{mis}})\\ p(\mathbf s_{ij, obs}\mid \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{s_{obs}})\, p(\mathbf x_{i, mis}\mid \mathbf x_{i, obs}, \boldsymbol\theta_{x_{mis}})\, p(\mathbf x_{i, obs} \mid \boldsymbol\theta_{x_{obs}})\\ p(\boldsymbol\theta_{y\mid x})\, p(\boldsymbol\theta_{s_{mis}}) \, p(\boldsymbol\theta_{s_{obs}})\, p(\boldsymbol\theta_{x_{mis}}) \, p(\boldsymbol\theta_{x_{obs}}) \end{multline*}\] Given that the parameter vectors \(\boldsymbol\theta_{x_{obs}}\), \(\boldsymbol\theta_{x_{mis}}\), \(\boldsymbol\theta_{s_{obs}}\) and \(\boldsymbol\theta_{s_{mis}}\) are a priori independent, and \(p(\mathbf x_{i, obs} \mid \boldsymbol\theta_{x_{obs}})\) is independent of both \(\mathbf x_{i,mis}\) and \(\mathbf s_{ij,mis}\), it can be excluded from the model.

Since \(p(\mathbf s_{ij, obs}\mid \mathbf x_{i, obs}, \mathbf x_{i, mis}, \boldsymbol\theta_{s_{obs}})\), however, has \(\mathbf x_{i, mis}\) in its linear predictor and will, hence, be part of the full conditional distribution of \(\mathbf x_{i, mis}\), it cannot be omitted from the model specification.

Non-linear Associations and Interactions

Other settings in which the fully Bayesian approach employed in JointAI has an advantage over standard FCS are settings with interaction terms that involve incomplete covariates, or when the association of the outcome with an incomplete covariate is non-linear. In standard FCS such settings lead to incompatible imputation models (White, Royston, and Wood 2011; Bartlett et al. 2015). This becomes clear when considering the following simple example where the analysis model of interest is the linear regression \(y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \varepsilon_i\) and \(x_i\) is imputed using \(x_i = \alpha_0 + \alpha_1 y_i + \tilde\varepsilon_i\). While the analysis model assumes a quadratic relationship, the imputation model assumes a linear association between \(\mathbf x\) and \(\mathbf y\) and a joint distribution with these imputation and analysis models as its full conditional distributions does not exist. Because, in JointAI, the analysis model is a factor in the full conditional distribution that is used to impute \(x_i\), the non-linear association is taken into account. Furthermore, since it is the joint distribution that is specified, and the full conditionals are derived from it, the joint distribution is guaranteed to exist.

6.2.3 Prior Distributions

Prior distributions have to be specified for all (hyper)parameters. A common prior choice for the regression coefficients is the normal distribution with mean zero and large variance. In JointAI variance parameters in models for normally distributed variables are specified as inverse-gamma distributions.

The covariance matrix of the random effects in a mixed model, \(\mathbf D\), is assumed to follow an inverse-Wishart distribution where the degrees of freedom are chosen to be equal to the dimension of the random effects and the scale matrix is diagonal. Since the magnitude of the diagonal elements relates to the variance of the random effects, the choice of suitable values depends on the scale of the variable the random effect is associated with. Therefore, JointAI uses independent gamma hyperpriors for each of the diagonal elements. More details about the default hyperparameters and how to change them are given in Section 6.5.8 and Appendix 6.A.

6.3 Package Structure

The package JointAI has seven main functions, lm_imp(),

glm_imp(), clm_imp(), lme_imp(), glme_imp(), clmm_imp(), survreg_imp()

and coxph_imp(),

that perform regression of continuous and categorical, univariate or multi-level

data as well as right censored survival data.

Model specification is similar to that of standard regression

models in R and is described in detail in Section 6.5.

Based on the specified model formula and other arguments that are provided by the user, JointAI does some pre-processing of the data. It checks which variables are incomplete and/or time-varying, and identifies their measurement level in order to specify appropriate (imputation) models. Interactions and functional forms of variables are detected in the model formula and corresponding design matrices for the various parts of the model are created.

MCMC sampling is performed by the program JAGS (Plummer 2003). The JAGS model, data list, containing all necessary parts of the data, and user-specified settings for the MCMC sampling (further described in Section 6.6) are passed to JAGS via the R package rjags (Plummer 2018).

The above named main functions, from here on abbreviated as *_imp(),

all return an object of class JointAI.

Summary and plotting methods for JointAI objects, as well as functions

to evaluate convergence and precision of the MCMC samples, to predict from

JointAI objects and to export imputed values are discussed in Section 6.7.

Currently, the package works under the assumption of a Missing At Random (MAR) missingness process (Rubin 1976, 1987). When this assumption holds, it is valid to exclude cases with missing values in the outcome in Bayesian inference. Hence, our focus here is on missing covariate values. Nevertheless, JointAI can handle missing values in the outcome; they are implicitly imputed using the specified analysis model.

6.4 Example Data

To illustrate the functionality of JointAI we use three

datasets that are part of this package or the package survival (Therneau 2015; Terry M. Therneau and Patricia M. Grambsch 2000).

The first dataset, the NHANES data, contains data from a

cross-sectional cohort study,

whereas the second dataset (simLong) is a simulated dataset based on a

longitudinal cohort study in toddlers.

The third dataset (lung) contains data on survival of patients with advanced lung cancer.

6.4.1 The NHANES Data

The NHANES data is a subset of observations from the 2011 – 2012 wave of

the National Health and Nutrition Examination Survey (National Center for Health Statistics (NCHS) 2011) and

contains information on 186 men and women

between 20 and 80 years of age.

The variables contained in this dataset are

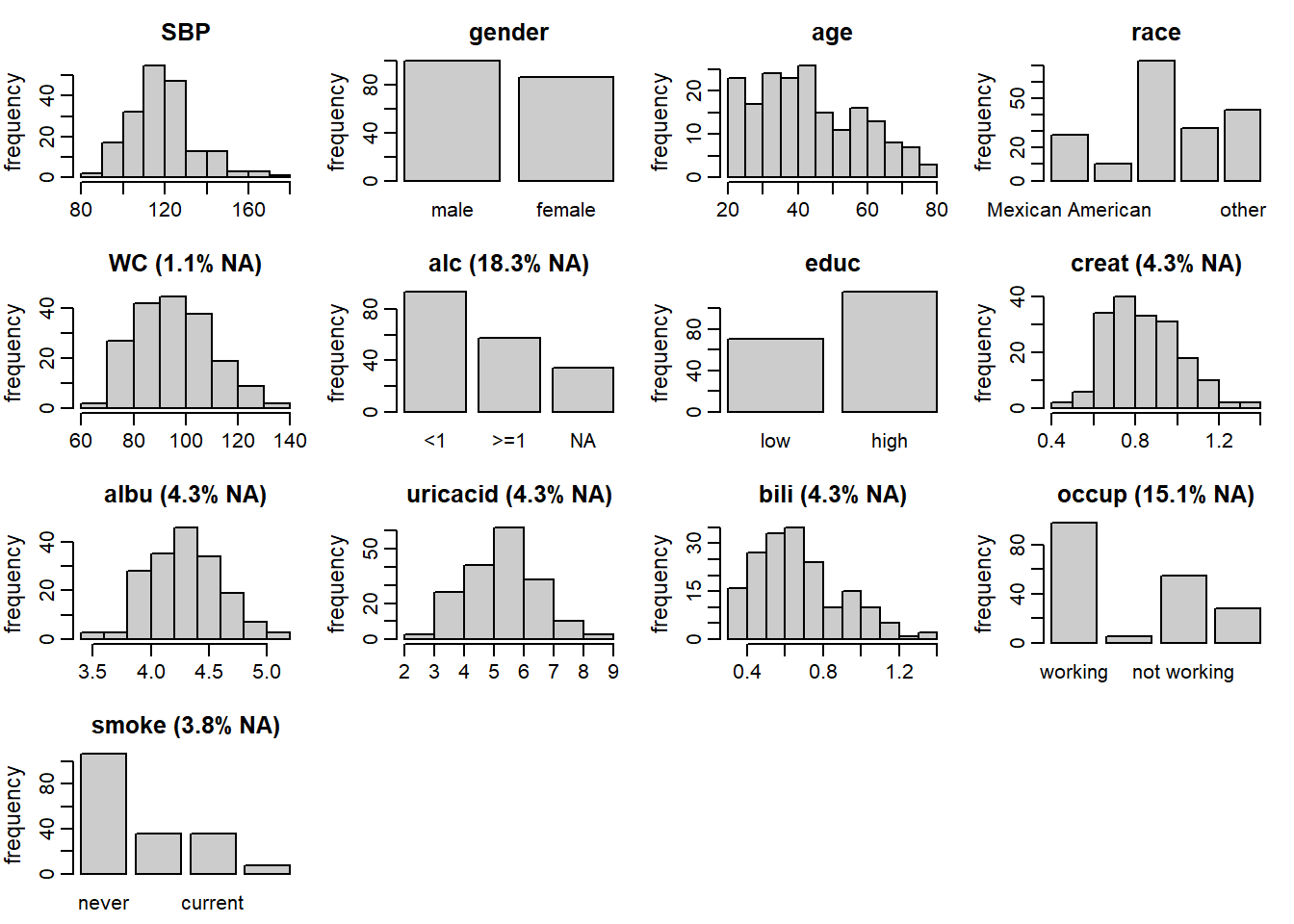

SBP: systolic blood pressure in mmHg; completegender:malevsfemale; completeage: in years; completerace: 5 unordered categories; completeWC: waist circumference in cm; 1.1% missingalc: alcohol consumption;<1drink per week vs>= 1drink per week; 18.3% missingeduc: educational level;lowvshigh; completecreat: creatinine concentration in mg/dL; 4.5% missingalbu: albumin concentration in g/dL; 4.3% missinguricacid: uric acid concentration in mg/dL; 4.3% missingbili: bilirubin concentration in mg/dL; 4.3% missingoccup: occupational status; 3 unordered categories; 15.1% missingsmoke: smoking status; 3 ordered categories; 3.8% missing

Figure 6.1: Distribution of the variables in the NHANES data (with the

percentage of missing values given for incomplete variables).

Figure 6.1 shows the distributions of all variables

in the NHANES data, together with the proportion of missing

values for incomplete variables, and can be obtained with the function plot_all().

Arguments fill and border allow colours to change,

the number of rows and columns can be adapted using nrow and/or ncol, and

additional arguments can be passed to hist() and barplot() via "...".

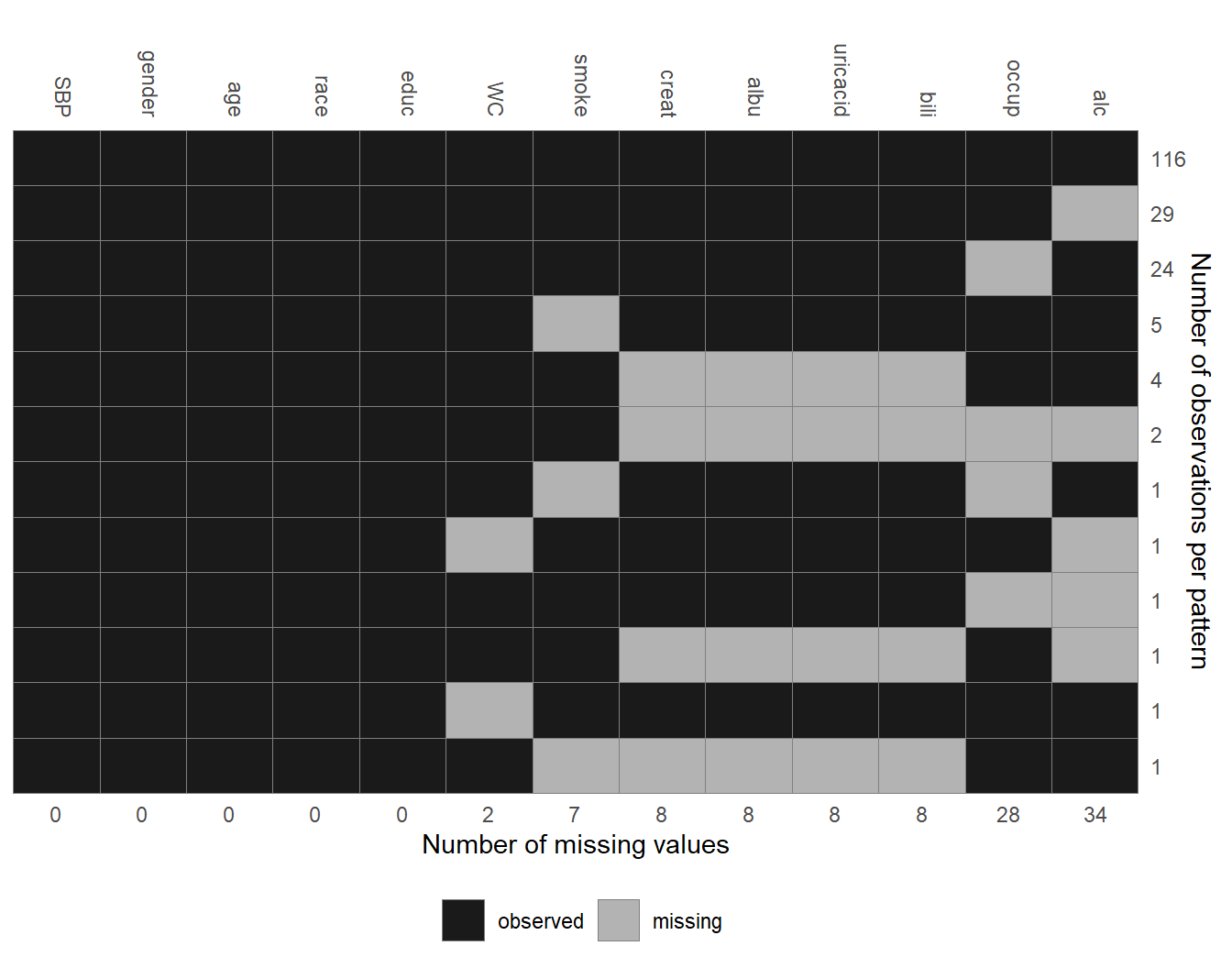

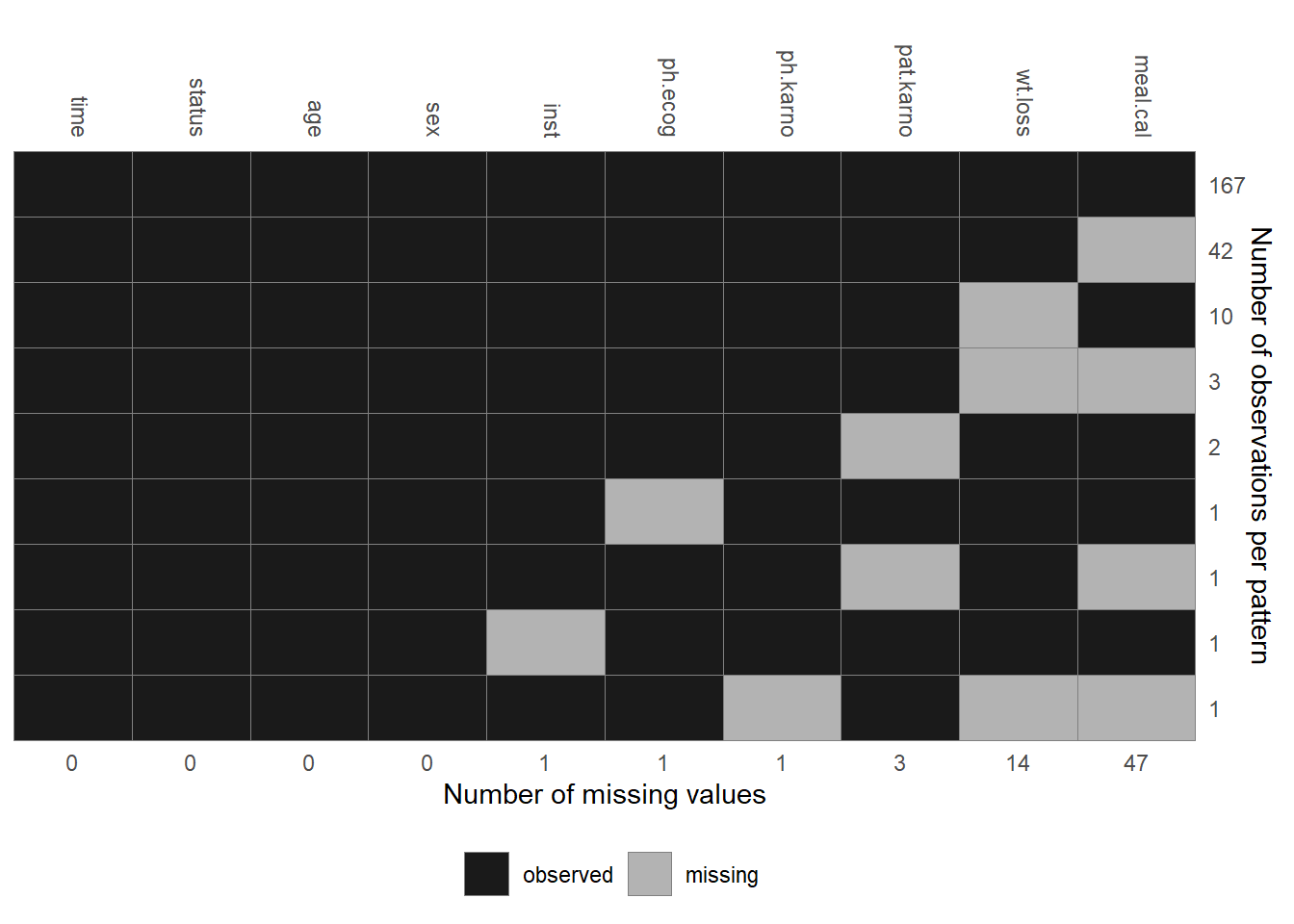

Figure 6.2: Missing data pattern of the NHANES data.

The pattern of missing values in the NHANES data is shown in Figure 6.2.

Each row represents a pattern of missing values, where observed (missing)

values are depicted with dark (light) colour. The frequency with which each of

the patterns is observed is given on the right margin, the number of missing

values in each variable is given underneath the plot. Rows and columns are

ordered by the number of cases per pattern (decreasing) and the number of missing values

(increasing). The first row, for instance, shows that there are 116 complete cases,

the second row that there are 29 cases for which only alc is missing.

Furthermore, it is apparent that creat, albu, uricacid and bili are

always missing together.

Since these variables are all measured in serum this is not surprising.

The plot of the missing data pattern can be obtained with md_pattern().

Again, arguments color and border allow us to change

colours, and arguments such as legend.position, print_xaxis and print_yaxis

permit further customization.

A matrix representation of the missing data pattern can be obtained by setting

pattern = TRUE. There, missing and observed values

are represented with a "0" and "1" respectively.

6.4.2 The simLong Data

The simLong data is a simulated dataset mimicking a longitudinal cohort study

of 200 mother-child pairs. It contains the following

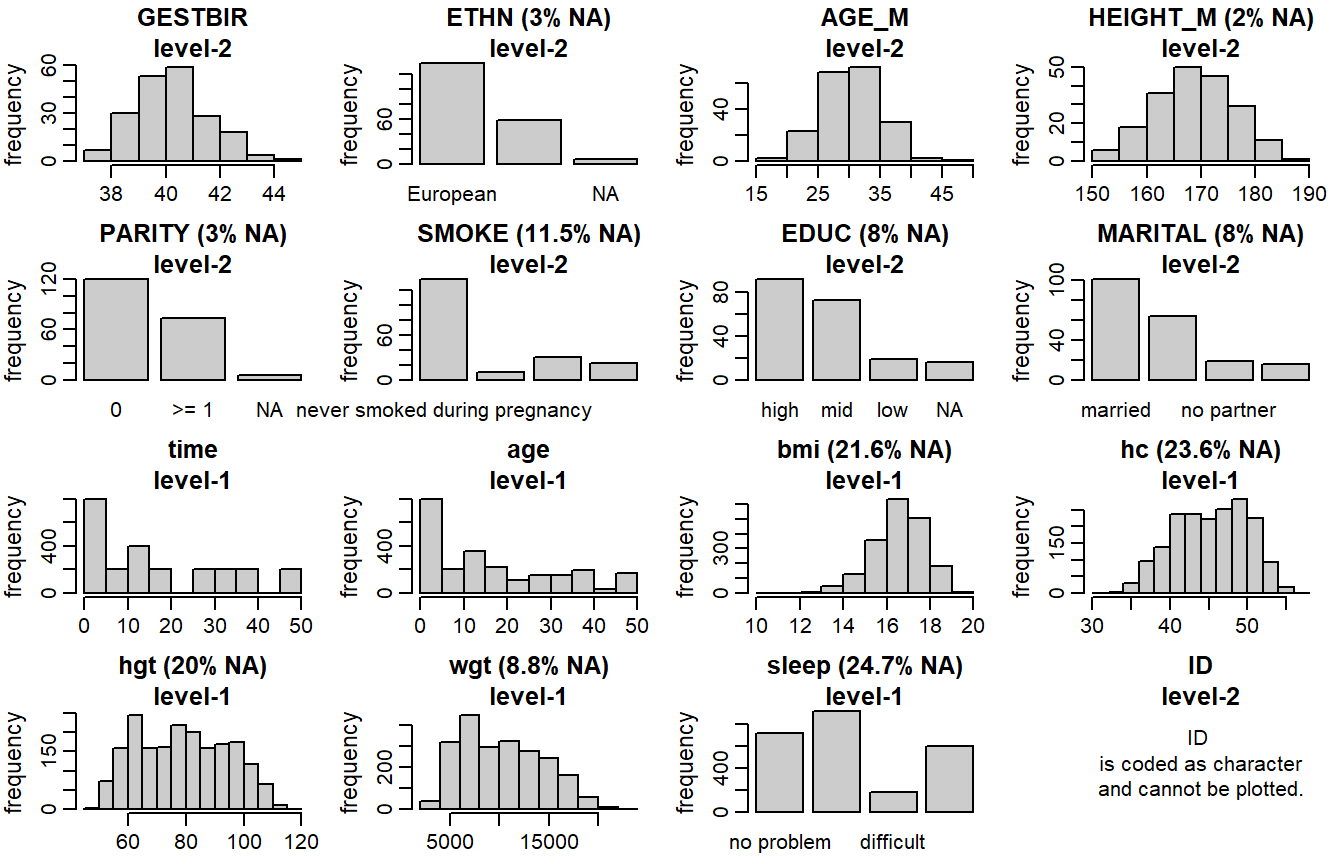

baseline (i.e., not time-varying) covariates

GESTBIR: gestational age at birth in weeks; completeETHN: ethnicity;Europeanvsother; 2.8% missingAGE_M: age of the mother at intake; completeHEIGHT_M: height of the mother in cm; 2.0% missingPARITY: number of times the mother has given birth;0vs>=1; 2.4% missingSMOKE: smoking status of the mother during pregnancy; 3 ordered categories; 12.2% missingEDUC: educational level of the mother; 3 ordered categories; 7.8% missingMARITAL: marital status; 3 unordered categories; 7.0% missingID: subject identifier

and seven longitudinal variables:

time: measurement occasion/visit (by design children should have been measured at 1, 2, 3, 4, 7, 11, 15, 20, 26, 32, 40 and 50 months of age); completeage: child’s age at measurement time in monthshgt: child’s height in cm; 20.0% missingwgt: child’s weight in gram; 8.8% missingbmi: child’s BMI (body mass index) in kg/m2; 21.6% missinghc: child’s head circumference in cm; 23.6% missingsleep: child’s sleeping behaviour; 3 ordered categories; 24.7% missing

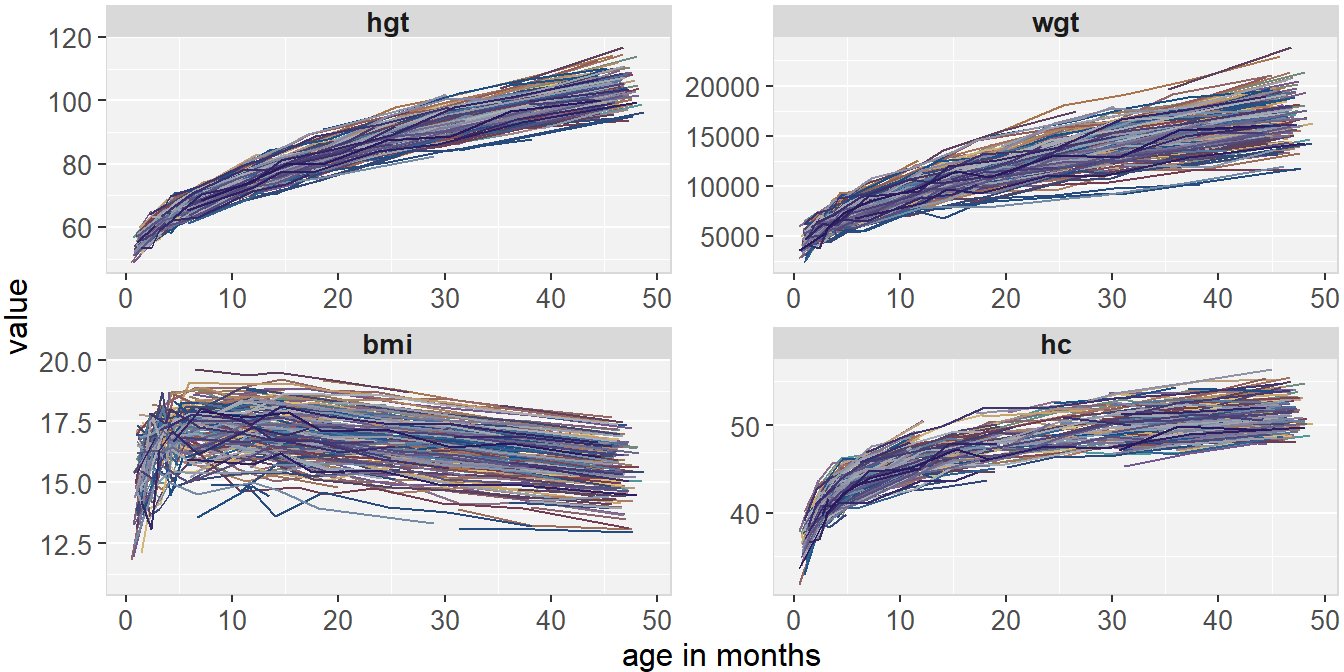

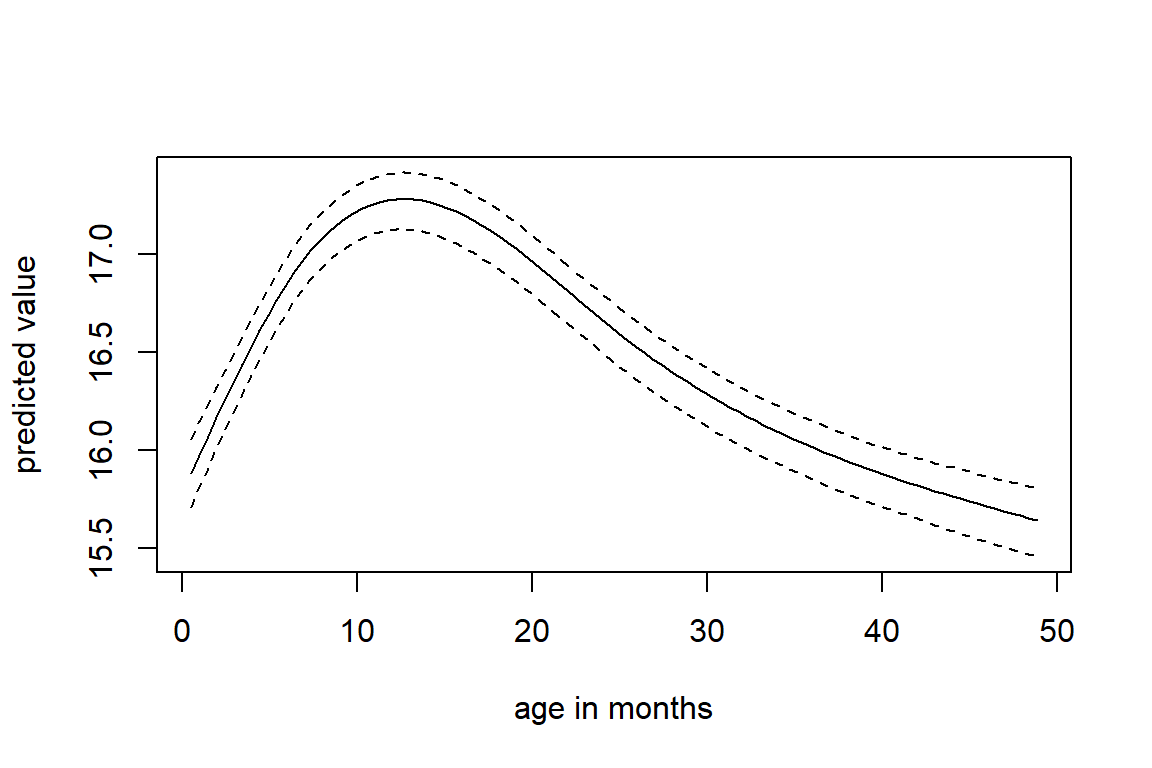

Figure 6.3 shows the longitudinal profiles of

hgt, wgt, bmi and hc over age.

All four variables have a non-linear pattern over time.

Distributions of all variables in the simLong data are displayed in

Figure 6.4. Here, arguments use_level and idvar

of the function plot_all() are used to display baseline (level-2) covariates

on the subject level instead of the observation level:

Figure 6.3: Trajectories of height, weight, BMI and head circumference in the simLong data.

Figure 6.4: Distribution of the variables in the simLong data (with

percentage of missing values given for incomplete variables).

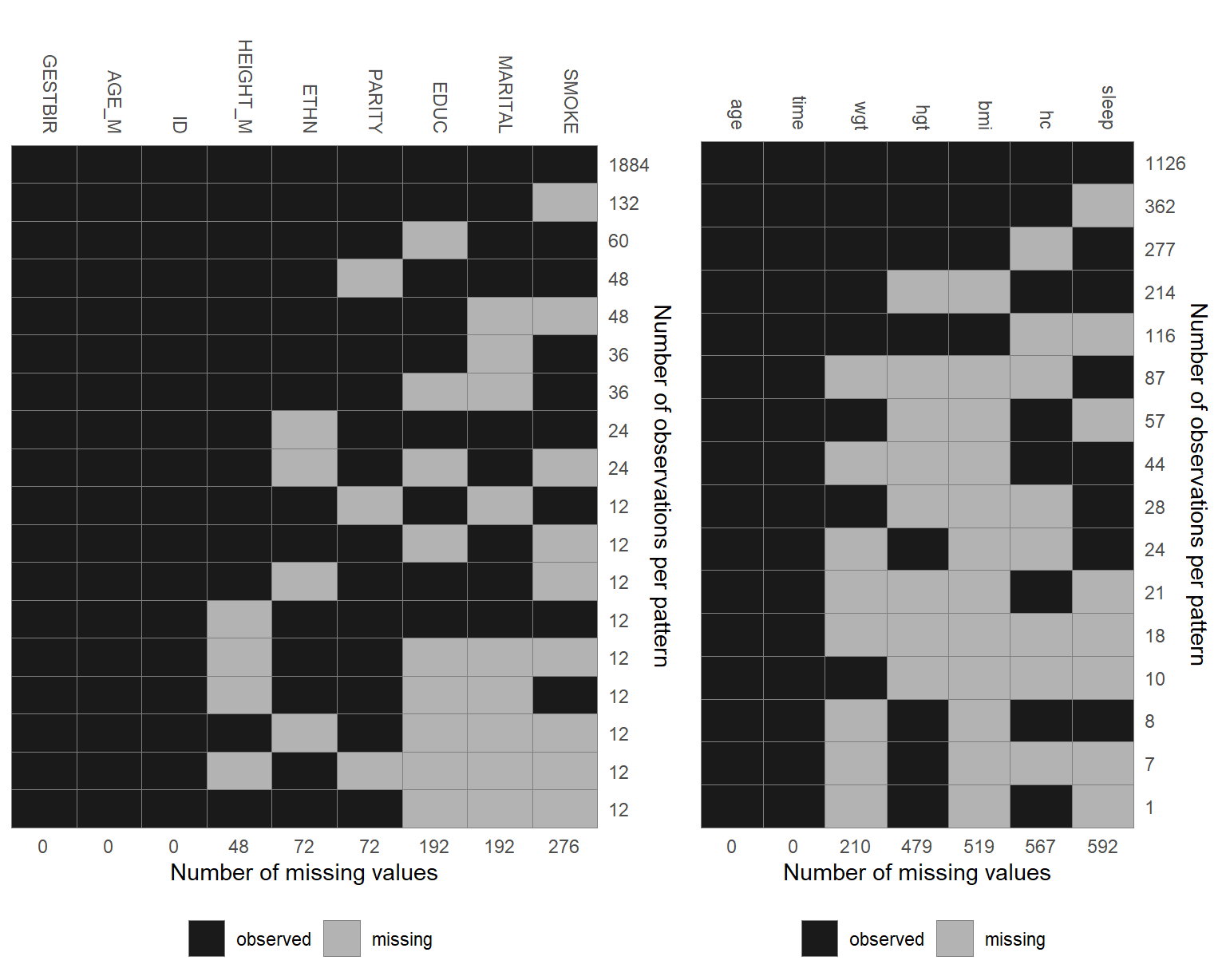

The missing data pattern of the simLong data is shown in Figure 6.5.

For readability, it is plotted separately for baseline (left) and longitudinal

(right) covariates.

Figure 6.5: Missing data pattern.

6.4.3 The lung Data

For demonstration of the use of JointAI for the analysis of survival data,

we use the dataset lung that is included in

the R package survival. It contains data of 228 patients with

advanced lung cancer and includes the following variables:

inst: institution code; completetime: survival time in days; completestatus: event indicator (1: censored,2: dead); completeage: patient’s age in years; completesex: male (1) vs female (2); completeph.ecog: ECOG performance score (describes how the disease impacts the patient’s daily life); scale from0(no impact) to5(dead); 0.4% missingph.karno: Karnofsky performance score as rated by physician (describes the degree of a patient’s impairment by the disease); scale from0(dead) to100(no impairment); 0.4% missingpat.karno: Karnofsky performance score as rated by patient; 1.3% missingmeal.cal: kilocalories consumed at meals; 20.6% missingwt.loss: weight loss over the last six months in kg; 6.1% missing

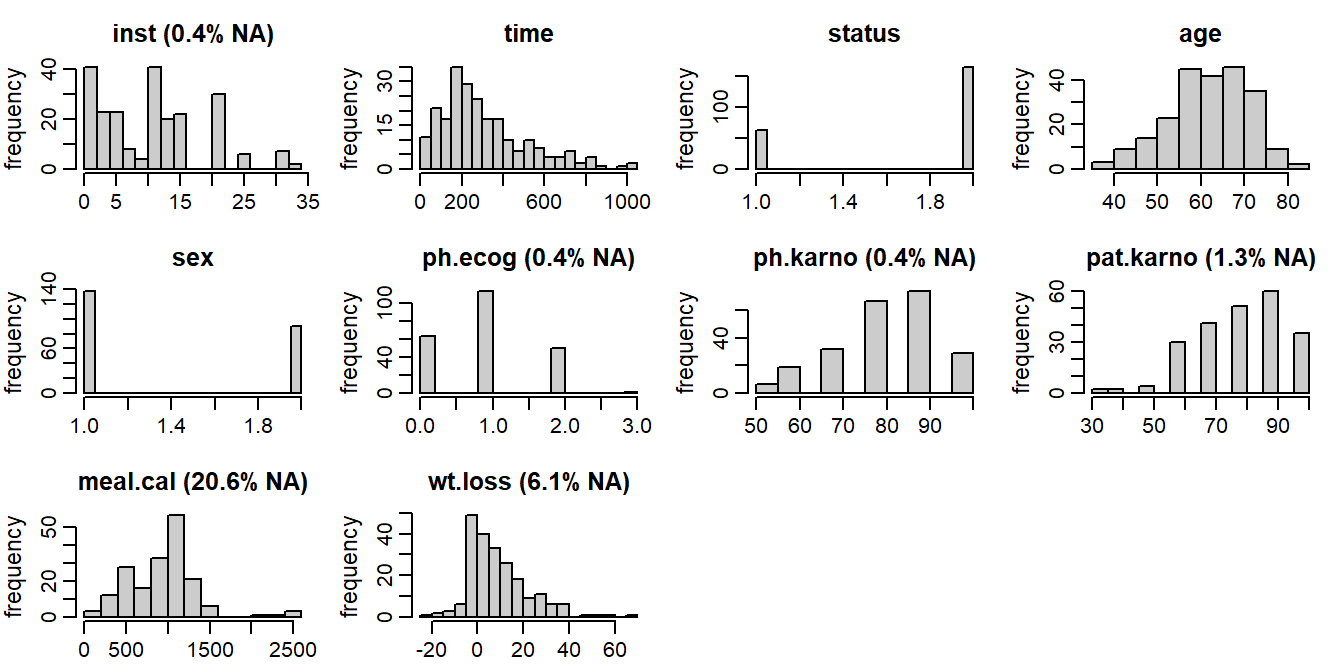

The distribution of the observed values and the missing data pattern of the lung

data are shown in Figures 6.6 and 6.7.

Figure 6.6: Distribution of the variables in the lung data (with

percentage of missing values given for incomplete variables).

Figure 6.7: Missing data pattern of the lung data.

6.5 Model Specification

The main arguments of the functions

lm_imp(formula, data,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

glm_imp(formula, family, data,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

clm_imp(formula, data,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

lme_imp(fixed, data, random,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

glme_imp(fixed, data, random, family,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

clmm_imp(fixed, data, random,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

survreg_imp(formula, data,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)

coxph_imp(formula, data,

n.chains = 3, n.adapt = 100, n.iter = 0, thin = 1, ...)i.e., formula, data, family, fixed, and random, are used

analogously to the specification in the standard complete data functions

lm() and glm() from package stats, lme() from package nlme (Pinheiro et al. 2018),

and survreg() and coxph() from package survival.

For the description of the remaining arguments see Section 6.6.

The arguments formula (in lm_imp(), glm_imp() and clm_imp()) and fixed

(in lme_imp(), glme_imp() and clmm_imp()) take a standard two-sided formula object,

where an intercept is added automatically.

For the use of the argument random, see Section 6.5.3.

Survival models expect the left hand side of formula to be a survival object

(created with the function Surv() from package survival;

see Section 6.5.4).

The argument family enables the choice of a distribution and link function

from a range of options when using glm_imp() or glme_imp(). The implemented

options are given in Table 6.1.

| family | |

|---|---|

gaussian

|

with links: identity, log

|

binomial

|

with links: logit, probit, log, cloglog

|

Gamma

|

with links: identity, log

|

poisson

|

with links: log, identity

|

6.5.1 Interactions

In JointAI, interactions between any type of variables (observed, incomplete, time-varying) can be handled. When an incomplete variable is involved, the interaction term is re-calculated within each iteration of the MCMC sampling, using the imputed values from the current iteration. Interaction terms involving incomplete variables should hence not be pre-calculated as an additional variable since this would lead to inconsistent imputed values of main effect and interaction term.

Interactions between multiple variables can be specified using parentheses;

for higher lever interactions the "^" operator can be used:

6.5.2 Non-linear Functional Forms

In practice, associations between outcome and covariates do not always meet the standard assumption of linearity. Often, assuming a logarithmic, quadratic or other non-linear effect is more appropriate.

For completely observed covariates, JointAI can handle any common type of

function implemented in R, including splines, e.g., using

ns() or bs() from the package splines.

Since functions involving variables that have missing values need to be

re-calculated in each iteration of the MCMC sampling, currently, only functions

that are available in JAGS can be used for incomplete variables.

Those functions include:

log(),exp()sqrt(), polynomials (usingI())abs()sin(),cos()- algebraic operations involving one or multiple (in)complete variables, as long as the formula can be interpreted by JAGS.

The list of functions implemented in JAGS can be found in the JAGS user manual available at https://sourceforge.net/projects/mcmc-jags/files/Manuals/.

Some examples (that do not necessarily have a meaningful interpretation or good model fit) are:

library("splines")

mod2b <- lm_imp(SBP ~ ns(age, df = 2) + gender + I(bili^2) + I(bili^3),

data = NHANES)mod2c <- lm_imp(SBP ~ age + gender + I(creat/albu^2), data = NHANES,

trunc = list(albu = c(1e-5, 1e5)))

# (for explantion of the argument trunc see section below)It is also possible to nest a function in another function.

6.5.2.1 Functions with Restricted Support

When a function of an incomplete variable has restricted support,

e.g., \(\log(x)\) is only defined for \(x > 0\),

or, as in mod2c from above, I(creat/albu^2) can not be calculated for

albu = 0,

the distribution used to impute that variable needs to comply

with these restrictions.

This can either be achieved by truncating the distribution, using the

argument trunc, or by selecting a distribution that meets the restrictions.

Example:

When using a \(\log\) transformation for the covariate bili, we can

use the default imputation method "norm" (a normal distribution) and truncate

it by specifying trunc = list(bili = c(lower, upper)), where lower and

upper are the smallest and largest values allowed:

mod3a <- lm_imp(SBP ~ age + gender + log(bili) + exp(creat),

trunc = list(bili = c(1e-5, 1e10)), data = NHANES)Truncation always requires the specification of both limits. Since \(-\text{Inf}\) and \(\text{Inf}\) are not accepted, a value far enough outside the range of the variable (here: \(1e10\)) can be selected for the second boundary of the truncation interval.

Alternatively, we may choose a model for the incomplete variable (using the argument models;

for more details see Section 6.5.5)

that only imputes positive values such as a

log-normal distribution ("lognorm") or a gamma distribution ("gamma"):

Functions Not Available in R

It is possible to use functions that have different names in R and JAGS, or that do exist in JAGS, but not in R, by defining a new function in R that has the name of the function in JAGS.

Example:

In JAGS the inverse logit transformation is defined in the function

ilogit. In base R, there is no function ilogit, but the inverse logit

is available as the distribution function of the logistic distribution plogis().

Thus, we can define the function ilogit() as

and use it in the model formula

A Note on What Happens Inside JointAI

When a function of a complete or incomplete variable is used in the model formula, the main effect of that variable is automatically added as an auxiliary variable (more on auxiliary variables in Section 6.5.6), and only the main effects are used as predictors in the imputation models.

In mod2b, for example, the spline of age is used as predictor for SBP,

but in the imputation model for bili, age enters with a linear effect.

This can be checked using the function

list_models(),

which prints a list of the covariate models used in a JointAI model.

Here, we are only interested in the predictor variables, and, hence,

suppress printing of information on prior distributions, regression coefficients

and other parameters by setting priors, regcoef and otherpars to FALSE:

## Normal imputation model for 'bili'

## * Predictor variables:

## (Intercept), genderfemale, ageWhen a function of a variable is specified as an auxiliary variable,

this function is used in the imputation models.

For example, in mod4b, waist circumference (WC)

is not part of the model for SBP, and the quadratic term I(WC^2) is used in

the linear predictor of the imputation model for bili:

mod4b <- lm_imp(SBP ~ age + gender + bili, auxvars = "I(WC^2)",

data = NHANES)

list_models(mod4b, priors = FALSE, regcoef = FALSE, otherpars = FALSE)## Normal imputation model for 'WC'

## * Predictor variables:

## (Intercept), age, genderfemale

##

## Normal imputation model for 'bili'

## * Predictor variables:

## (Intercept), age, genderfemale, I(WC^2)Incomplete variables are always imputed on their original scale, i.e.,

in mod2b the variable bili is imputed and the quadratic and cubic versions

are calculated from the imputed values.

Likewise, creat and albu in mod2c

are imputed separately, and I(creat/albu^2) is calculated from the imputed (and

observed) values.

To ensure consistency between variables, functions involving incomplete

variables should be specified as part of the model formula and not be

pre-calculated as separate variables.

6.5.3 Multi-level Structure and Longitudinal Covariates

In multi-level models, additional to the fixed effects structure specified by

the argument fixed, a random effects structure needs to be provided via the

argument random.

Analogous to the specification of the argument random in lme(), it

takes a one-sided formula starting with a "~", and the grouping

variable is separated by "|". A random intercept is added automatically and

only needs to be specified in a random intercept only model.

A few examples:

random = ~1 | id: random intercept only, withidas grouping variablerandom = ~time | id: random intercept and slope for variabletimerandom = ~time + I(time^2) | id: random intercept, slope and quadratic random effect fortimerandom = ~time + x | idrandom intercept, random slope fortimeand random effect for variablex

It is possible to use splines in the random effects structure if there are no missing values in the variables involved, e.g.:

mod5 <- lme_imp(bmi ~ GESTBIR + ETHN + HEIGHT_M + ns(age, df = 2),

random = ~ ns(age, df = 2) | ID, data = simLong)Longitudinal (“time-varying”; level-1) covariates can be used in the fixed or random effects and will be imputed if they contain any missing values. Currently only one level of nesting is possible.

6.5.4 Survival Models

JointAI provides two functions to analyse survival data with incomplete

covariates: survreg_imp() and coxph_imp(). Analogous to the complete data

versions of these functions from the package survival, the left-hand side

of the model formula has to be a survival object specified using the function

Surv().

Example:

To analyse the lung data, we can either use a parametric Weibull model

or a Cox proportional hazards model. The survival package needs to be

loaded for the function Surv().

library(survival)

mod6a <- survreg_imp(Surv(time, status) ~ age + sex + ph.karno +

meal.cal + wt.loss, data = lung, n.iter = 250)

mod6b <- coxph_imp(Surv(time, status) ~ age + sex + ph.karno + meal.cal +

wt.loss, data = lung, n.iter = 250)Currently only right censored survival data and time-constant covariates can be handled and it is not yet possible to take into account strata, clustering or frailty terms.

6.5.5 Imputation / Covariate Model Types

JointAI automatically selects an (imputation) model for each of the

incomplete baseline (level-2) or longitudinal (level-1) covariates,

based on the class of the variable and the number of levels.

The automatically selected types for baseline covariates are:

norm: linear model (default for continuous variables)logit: binary logistic model (default for factors with two levels)multilogit: multinomial logit model (default for unordered factors with \(>2\) levels)cumlogit: cumulative logit model (default for ordered factors with \(>2\) levels)

The default methods for longitudinal covariates are:

lmm: linear mixed model (default for continuous longitudinal variables)glmm_logit: logistic mixed model (default for longitudinal factors with two levels)clmm: cumulative logit mixed model (default for longitudinal ordered factors with >2 levels)

When a continuous variable has only two different values,

it is automatically converted to a factor and imputed using a logistic model,

unless an imputation model type is specified by the user. Variables of type

logical are automatically converted to binary factors.

The imputation models that are chosen by default may not necessarily be appropriate for the data at hand, especially for continuous variables, which often do not comply with the assumptions of (conditional) normality.

Therefore, the following alternative imputation methods are available for continuous baseline covariates:

lognorm: normal model for the log-transformed variable (right-skewed variables \(>0\))gamma: gamma model with log-link (right-skewed variables \(>0\))beta: beta model with logit-link (continuous variables in \([0, 1]\))

lognorm assumes a (conditional) normal distribution for the natural logarithm

of the variable, but the variable enters the linear predictor of the analysis

model (and possibly other imputation models) on its original scale.

For longitudinal covariates the following alternative model types are available:

glmm_gamma: gamma mixed model with log-link (right-skewed variables \(>0\))glmm_poisson: Poisson mixed model with log-link (count variables)

Specification of Imputation/Covariate Model Types

In models mod3b and mod3c in Section 6.5.2.1 we have already

seen two examples in which the imputation model type was changed using the

argument models, which takes a named vector.

When the vector supplied to models only contains specifications for a subset

of the incomplete and longitudinal covariates, default models

are used for the remaining covariates.

As explained in Section 6.2.2, models for completely observed

longitudinal covariates only need to be specified when any baseline

covariates have missing values.

mod7a <- lm_imp(SBP ~ age + gender + WC + alc + bili + occup + smoke,

models = c(WC = 'gamma', bili = 'lognorm'),

data = NHANES, n.iter = 100)

mod7a$models## WC smoke bili occup alc

## "gamma" "cumlogit" "lognorm" "multilogit" "logit"The function get_models(), which finds and assigns the default imputation

methods, can be called directly.

get_models() has arguments

fixed: the fixed effects formularandom: the random effects formula (if necessary)data: the datasetauxvars: an optional vector of auxiliary variablesno_model: an optional vector of names of covariates for which no model will be specified

mod7b_models <- get_models(bmi ~ GESTBIR + ETHN + HEIGHT_M + SMOKE + hc +

MARITAL + ns(age, df = 2),

random = ~ ns(age, df = 2) | ID,

data = simLong)

mod7b_models## $models

## HEIGHT_M ETHN MARITAL SMOKE age

## "norm" "logit" "multilogit" "cumlogit" "lmm"

## hc

## "lmm"

##

## $meth

## HEIGHT_M ETHN MARITAL SMOKE hc

## "norm" "logit" "multilogit" "cumlogit" "lmm"get_models() returns a list of two vectors:

models: containing all specified modelsmeth: containing the models for the incomplete variables only

When there is a “time” variable in the model, such as age in our example

(which is the age of the child at the time of the measurement),

it may not be meaningful to specify a model for that variable.

Especially when the “time” variable is pre-specified by the design of the study,

it can usually be assumed to be independent of baseline covariates and a model for it

has no useful interpretation.

The argument no_model (in get_models() and in the main

functions *_imp()) allows for the exclusion of models for such variables

(as long as they are completely observed):

mod7c_models <- get_models(bmi ~ GESTBIR + ETHN + HEIGHT_M + SMOKE + hc +

MARITAL + ns(age, df = 2),

random = ~ ns(age, df = 2) | ID,

data = simLong, no_model = "age")

# mod7c_modelsBy excluding the model for age we implicitly assume that incomplete baseline

variables are independent of age.

Order of the Sequence of Imputation Models

By default, models for level-1 covariates are specified after models for level-2 covariates, so that the level-2 covariates are used as predictor variables in the models for level-1 covariates but not vice versa. Within the two groups, models are ordered by the number of missing values (decreasing), so that the model for the variable with the most missing values has the most variables in its linear predictor.

6.5.6 Auxiliary Variables

Auxiliary variables are variables that are not part of the analysis model but should be considered as predictor variables in the imputation models because they can inform the imputation of unobserved values.

Good auxiliary variables are (Van Buuren 2012)

- associated with an incomplete variable of interest, or are

- associated with the missingness of that variable and

- do not have too many missing values themselves. Importantly, they should be observed for a large proportion of the cases that have a missing value in the variable to be imputed.

In *_imp(), auxiliary variables can be

specified with the argument auxvars, which is a vector containing the names

of the auxiliary variables.

Example:

We might consider the variables educ and smoke as predictors

for the imputation of occup:

mod8a <- lm_imp(SBP ~ gender + age + occup, auxvars = c('educ', 'smoke'),

data = NHANES, n.iter = 100)The variables educ and smoke are not included in the analysis model

(as can be seen when printing the posterior mean of the regression coefficients

of the analysis model with coef()):

## (Intercept) genderfemale age

## 105.5937694 -5.8192149 0.3781785

## occuplooking for work occupnot working

## 4.0294291 -0.7349338They are, however, used as predictors in the imputation for occup and

imputed themselves (if they have missing values):

## Cumulative logit imputation model for 'smoke'

## * Predictor variables:

## genderfemale, age, educhigh

##

## Multinomial logit imputation model for 'occup'

## * Predictor variables:

## (Intercept), genderfemale, age, educhigh, smokeformer, smokecurrentFunctions of Variables as Auxiliary Variables

As shown above in mod3e, it is possible to specify functions of

auxiliary variables. In that case, the auxiliary variable is not considered as

a linear effect but as specified by the function.

Note that omitting auxiliary variables from the analysis model implies that the outcome is independent of these variables, conditional on the other variables in the model. If this is not true, the model is misspecified which may lead to biased results (similar to leaving a confounding variable out of a model).

6.5.7 Reference Values for Categorical Covariates

In JointAI, dummy coding is used when categorical variables enter a linear predictor, i.e., a binary variable is created for each category, except the reference category. These binary variables have value one when that category was observed and zero otherwise. Contrary to the behaviour in base R, this coding is also used for ordered factors.

By default, the first category of a categorical variable (ordered or unordered)

is used as reference, however, this may not always allow the desired interpretation

of the regression coefficients. Moreover, when categories are unbalanced, setting

the largest group as reference may result in better mixing of the MCMC chains.

Therefore, JointAI allows specification of the reference category separately

for each variable, via the argument refcats.

Changes in refcats will not impact the imputation of the respective variable,

but change categories for which dummy variables are included in the linear

predictor of the analysis model or other covariate models.

Setting Reference Categories for All Variables

To specify the choice of reference category globally for all variables in the

model, refcats can be set as

refcats = "first"refcats = "last"refcats = "largest"

For example:

Setting Reference Categories for Individual Variables

Alternatively, refcats takes a named vector, in which the reference category

for each variable can be specified either by its number or its name, or one of

the three global types: "first", "last" or "largest".

For variables for which no reference category is specified in the list the

default is used.

mod9b <- lm_imp(SBP ~ gender + age + race + educ + occup + smoke,

refcats = list(occup = "not working", race = 3,

educ = "largest"), data = NHANES)To facilitate specification of the reference categories, the function

set_refcat()

can be used.

It prints the names of the categorical variables that are selected by

- a specified model formula and/or

- a vector of auxiliary variables, or

- a vector naming covariates,

or all categorical variables in the data if only the argument data is provided,

and asks a number of questions which the user needs to reply to via input of

a number.

##

## How do you want to specify the reference categories?

##

## 1: Use the first category for each variable.

## 2: Use the last category for each variabe.

## 3: Use the largest category for each variable.

## 4: Specify the reference categories individually.When option 4 is chosen, a choice is given for each categorical variable, for example:

#> The reference category for "race" should be

#>

#> 1: Mexican American

#> 2: Other Hispanic

#> 3: Non-Hispanic White

#> 4: Non-Hispanic Black

#> 5: otherAfter specification of the reference categories,

the determined specification for the argument refcats is printed:

#> In the JointAI model specify:

#> refcats = c(gender = 'female', race = 'Non-Hispanic White',

#> educ = 'low', occup = 'not working', smoke = 'never')

#>

#> or use the output of this function.set_refcat() also returns a named vector that can be passed to the argument

refcats:

6.5.8 Hyperparameters

In the Bayesian framework, parameters are random variables for which a distribution needs to be specified. These distributions depend on parameters themselves, i.e., on hyperparameters.

The function default_hyperpars() returns a list containing the default

hyperparameters used in a JointAI model (see Appendix 6.A).

To change the hyperparameters in a JointAI model, the list obtained from

default_hyperpars() can be edited and passed to the argument hyperpars

in *_imp().

mu_reg_* and tau_reg_* refer to the mean and precision of the prior distribution

for regression coefficients.

shape_tau_* and rate_tau_* are the shape and rate parameters of a gamma

distribution that is used as prior for precision parameters.

RinvD is the scale matrix in the Wishart prior for the inverse of the random

effects design matrix D, and KinvD is the number of degrees of freedom in that

distribution. shape_diag_RinvD and rate_diag_RinvD are the shape and rate parameters

of the gamma prior of the diagonal elements of RinvD.

In random effects models with only one random effect, a gamma prior is used

instead of the Wishart distribution for the inverse of D.

The hyperparameters mu_reg_surv and tau_reg_surv are used in

survreg_imp() as well as coxph_imp(). For the Cox proportional

hazards model, the hyperparameters c, r and eps refer to

the confidence in the prior guess for the hazard function, failure rate per unit time

(\(\lambda_0(t)^*\) in Section 6.2.1) and time increment

(for numerical stability), respectively.

6.5.9 Scaling

When variables are measured on very different scales this can result in slow convergence and bad mixing. Therefore, JointAI automatically scales continuous variables to have mean zero and standard deviation one. Results (parameters and imputed values) are transformed back to the original scale when the results are printed or imputed values are exported.

In some settings, however, it is not possible to scale continuous variables. This is the case for incomplete variables that enter a linear predictor in a function and variables that are imputed with models that are defined on a subset of the real line (i.e., with a gamma or a beta distribution). Variables that are imputed with a log-normal distribution are scaled, but not centred.

To prevent scaling, the argument scale_vars can be set to FALSE. When

a vector of variable names is supplied to scale_vars, only those variables

are scaled.

By default, only the MCMC samples that are scaled back to the scale of the data

are stored in a JointAI object.

When the argument keep_scaled_mcmc = TRUE, the scaled sample is also kept.

6.5.10 Ridge Regression

Using the argument ridge = TRUE it is possible to impose a ridge penalty

on the parameters of the analysis model, shrinking these parameters towards zero.

This is done by specification of a \(\text{Gamma}(0.01, 0.01)\) prior for the

precision of the regression coefficients \(\beta\) instead of setting it to a

fixed (small) value.

6.6 MCMC Settings

The functions *_imp() have a number of arguments that specify settings for

the MCMC sampling:

n.chains: number of MCMC chainsn.adapt: number of iterations in the adaptive phasen.iter: number of iterations in the sampling phasethin: thinning degreemonitor_params: parameters/nodes to be monitoredseed: optional seed value for reproducibilityinits: initial valuesparallel: whether MCMC chains should be sampled in parallelncores: how many cores are available for parallel sampling

The first four, as well as the following two parameters, are passed directly to functions from the R package rjags:

quiet: should printing of information be suppressed?progress.bar: type of progress bar ("text","gui"or"none")

In the following sections, the arguments listed above are explained in more detail and examples are given.

6.6.1 Number of Chains, Iterations and Samples

Number of Chains

To evaluate convergence of MCMC chains it is helpful to create multiple chains that have different starting values. More information on how to evaluate convergence and the specification of initial values can be found in Sections 6.7.3.1 and 6.6.3 respectively.

The argument n.chains selects the number of chains (by default, n.chains = 3).

For calculating the model summary, multiple chains are merged.

Adaptive Phase

JAGS has an adaptive mode, in which samplers are optimized (for example the

step size is adjusted).

Samples obtained during the adaptive mode do not form a Markov chain and are

discarded.

The argument n.adapt controls the length of this adaptive phase.

The default value for n.adapt is 100, which works well in many of the examples

considered here. Complex models may require longer adaptive phases. If the adaptive

phase is not sufficient for JAGS to optimize the samplers, a warning message will be

printed (see example below).

Sampling Iterations

n.iter specifies the number of iterations in the sampling phase, i.e., the length

of the MCMC chain. How many samples are required to reach convergence and to

have sufficient precision (see also Section 6.7.3.2)

depends on the complexity of data and model, and may

range from as few as 100 to several million.

Thinning

In settings with high autocorrelation, it may take many iterations before a

sample is created that sufficiently

represents the whole range of the posterior distribution.

Processing of such long chains can be slow and cause memory issues.

The parameter thin allows the user to specify if and how much the MCMC chains should

be thinned out before storing them. By default thin = 1 is used,

which corresponds to keeping all values. A value thin = 10 would result in

keeping every 10th value and discarding all other values.

6.6.1.1 Example: Default Settings

Using the default settings n.adapt = 100 and thin = 1, and 100 sampling

iterations, a simple model would be

The relevant part of the model summary (obtained with summary())

shows that the first 100 iterations

(adaptive phase) were discarded, the 100 iterations that follow form the

posterior sample, thinning was set to “1” and there are three chains.

## [...]

## MCMC settings:

## Iterations = 101:200

## Sample size per chain = 100

## Thinning interval = 1

## Number of chains = 3Example: Insufficient Adaptation Phase

## Warning in rjags::jags.model(file = modelfile, data = data_list, inits

## = inits, : Adaptation incomplete

## NOTE: Stopping adaptationSpecifying n.adapt = 10 results in a warning message. The relevant part of the

model summary from the resulting model is:

## [...]

## Iterations = 11:110

## Sample size per chain = 100

## Thinning interval = 1

## Number of chains = 3Example: Thinning

Here, iterations 110 until 600 are used in the output, but due to a thinning interval of ten, the resulting MCMC chains contain only 50 samples instead of 500, that is, the samples from iteration 110, 120, 130,

## [...]

## Iterations = 110:600

## Sample size per chain = 50

## Thinning interval = 10

## Number of chains = 36.6.2 Parameters to Follow

Since JointAI uses JAGS (Plummer 2003) for performing the MCMC sampling, and JAGS only saves the values of MCMC chains for those nodes which the user has specified should be monitored, this is also the case in JointAI.

For this purpose, the main functions *_imp() have an argument

monitor_params, which takes a named list (or a named vector) with

possible entries given in Table 6.2.

This table contains a number of keywords that refer to (groups of) nodes.

Each of the keywords works as a switch and can be specified as TRUE or FALSE

(with the exception of other).

| name/keyword | what is monitored |

|---|---|

analysis_main

|

betas and sigma_y (and D)

|

betas

|

regression coefficients of the analysis model |

tau_y

|

precision of the residuals from the analysis model |

sigma_y

|

std. deviation of the residuals from the analysis model |

analysis_random

|

ranef, D, invD, RinvD

|

ranef

|

random effects |

D

|

covariance matrix of the random effects |

invD

|

inverse of D

|

RinvD

|

scale matrix in Wishart prior for invD

|

imp_pars

|

alphas, tau_imp, gamma_imp, delta_imp

|

alphas

|

regression coefficients in the imputation models |

tau_imp

|

precision of the residuals from imputation models |

gamma_imp

|

intercepts in ordinal imputation models |

delta_imp

|

increments of ordinal intercepts |

imps

|

imputed values |

other

|

additional parameters |

Parameters of the Analysis Model

The default setting is monitor_params = c(analysis_main = TRUE), i.e.,

only the main parameters of the analysis model are monitored, and

monitoring is switched off for all other parameters.

Main parameters are the regression coefficients of the analysis model

(beta), depending on the model type, the residual standard deviation (sigma_y),

and, for mixed models, the random effects variance-covariance matrix D.

The function parameters() returns the parameters specified to be followed

(also for models where no MCMC sampling was performed, i.e., when n.iter = 0 and

n.adapt = 0). We use it here to demonstrate the effect that different choices

for monitor_params have.

For example:

## [1] "(Intercept)" "genderfemale" "WC" "alc>=1"

## [5] "creat" "sigma_SBP"Parameters of the Covariate Models and Imputed Values

The parameters of the models for the incomplete variables can be selected with

monitor_params = c(imp_pars = TRUE). This will set monitors for the

regression coefficients (alpha) and other parameters, such as precision

(tau_*) and intercepts and increments (gamma_* and delta_*) in cumulative

logit models.

mod11b <- lm_imp(SBP ~ age + WC + alc + smoke + occup,

data = NHANES, n.adapt = 0,

monitor_params = c(imp_pars = TRUE,

analysis_main = FALSE))

parameters(mod11b)## [1] "alpha" "tau_WC" "gamma_smoke" "delta_smoke"To generate (multiple) imputed datasets to be used for further analyses,

the imputed values need to be monitored. This can be achieved by setting

monitor_params = c(imps = TRUE).

mod11c <- lm_imp(SBP ~ age + WC + alc + smoke + occup,

data = NHANES, n.adapt = 0,

monitor_params = c(imps = TRUE, analysis_main = FALSE))Extraction of multiple imputed datasets from a JointAI model is described in Section 6.7.6.

Random Effects

For mixed models, analysis_main also includes the random effects variance-covariance

matrix D.

Setting analysis_random = TRUE will switch on monitoring

for the random effects (ranef), random effects variance-covariance matrix (D),

inverse of the random effects variance-covariance matrix (invD) and the diagonal of the

scale matrix of the Wishart-prior of invD (RinvD).

mod11d <- lme_imp(bmi ~ age + EDUC, random = ~age | ID,

data = simLong, n.adapt = 0,

monitor_params = c(analysis_random = TRUE))

parameters(mod11d)## [1] "(Intercept)" "EDUCmid" "EDUClow" "age" "sigma_bmi"

## [6] "b" "invD[1,1]" "invD[1,2]" "invD[2,2]" "D[1,1]"

## [11] "D[1,2]" "D[2,2]" "RinvD[1,1]" "RinvD[2,2]"It is possible to select only a subset of the random effects parameters by specifying them directly, e.g.,

mod11e <- lme_imp(bmi ~ age + EDUC, random = ~age | ID,

data = simLong, n.adapt = 0,

monitor_params = c(analysis_main = TRUE, RinvD = TRUE))

parameters(mod11e)## [1] "(Intercept)" "EDUCmid" "EDUClow" "age" "sigma_bmi"

## [6] "D[1,1]" "D[1,2]" "D[2,2]" "RinvD[1,1]" "RinvD[2,2]"or by switching unwanted parts of analysis_random off, e.g.,

mod11f <- lme_imp(bmi ~ age + EDUC, random = ~age | ID, data = simLong,

n.adapt = 0, monitor_params = c(analysis_main = TRUE,

analysis_random = TRUE,

RinvD = FALSE,

ranef = FALSE))

parameters(mod11f)## [1] "(Intercept)" "EDUCmid" "EDUClow" "age" "sigma_bmi"

## [6] "invD[1,1]" "invD[1,2]" "invD[2,2]" "D[1,1]" "D[1,2]"

## [11] "D[2,2]"Other Parameters

The element other in monitor_params allows for the specification of one or multiple

additional parameters to be monitored. When other is used with more than one

element, monitor_params has to be a list.

Here, as an example, we monitor the probability of being in the alc>=1 group for

subjects one through three and the expected value of the distribution of creat for

the first subject.

mod11g <- lm_imp(SBP ~ gender + WC + alc + creat, data = NHANES,

n.adapt = 0,

monitor_params = list(analysis_main = FALSE,

other = c('p_alc[1:3]',

"mu_creat[1]")))

parameters(mod11g)## [1] "p_alc[1:3]" "mu_creat[1]"Even though this example may not be particularly meaningful, in cases of convergence issues it can be helpful to be able to monitor any node of the model, not just the ones that are typically of interest.

6.6.3 Initial Values

Initial values are the starting point for the MCMC sampler. Setting good

initial values, i.e., values that are likely under the posterior distribution,

can speed up convergence.

By default, the argument inits = NULL, which means that initial values are

generated automatically by JAGS.

It is also possible to supply initial values directly as a list or as a function.

Initial values can be specified for every unobserved node, that is, parameters and missing values, but it is possible to only specify initial values for a subset of nodes.

When the initial values provided by the user do not have elements named

".RNG.name" or ".RNG.seed", JointAI will add those elements,

which specify the name and seed value of the random number generator used

for each chain.

The argument seed allows the specification of a seed value with which the

starting values of the random number generator, and, hence, the values of the MCMC

sample, can be reproduced.

Initial Values in a List of Lists

A list containing initial values should have the same length as the number of chains, where each element is a named list of initial values. Moreover, initial values should differ between the chains.

For example, to create initial values for the parameter vector beta and

the precision parameter tau_SBP for two chains the following syntax could

be used:

init_list <- lapply(1:2, function(i) {

list(beta = rnorm(4),

tau_SBP = rgamma(1, 1, 1))

})

init_list## [[1]]

## [[1]]$beta

## [1] 0.2995096 0.2123710 0.6478957 0.8952516

##

## [[1]]$tau_SBP

## [1] 1.000624

##

##

## [[2]]

## [[2]]$beta

## [1] 2.2559981 0.9786635 -1.2725176 -0.7251253

##

## [[2]]$tau_SBP

## [1] 0.05501739The user provided lists of initial values are stored in the JointAI object

(together with starting values for the random number generator)

and can be accessed via mod11a$mcmc_settings$inits.

Initial Values as a Function

Initial values can be specified as a function. The function should either take no

arguments or a single argument called chain, and return a named list that

supplies values for one chain.

For example, to create initial values for the parameter vectors beta and alpha:

## $beta

## [1] -1.6045542 0.1872611 1.0167161 -0.4272887

##

## $alpha

## [1] 0.8542140 0.6391477 0.4720952mod12b <- lm_imp(SBP ~ gender + age + WC, data = NHANES,

inits = inits_fun)

mod12b$mcmc_settings$inits## [[1]]

## [[1]]$beta

## [1] -0.07058338 0.41772091 -1.66236440 1.24957652

##

## [[1]]$alpha

## [1] -0.7204577 0.1424769 -1.0114044

##

## [[1]]$.RNG.name

## [1] "base::Wichmann-Hill"

##

## [[1]]$.RNG.seed

## [1] 77704

##

##

## [[2]]

## [[2]]$beta

## [1] -0.50236788 -0.01997157 1.40425944 1.18807193

##

## [[2]]$alpha

## [1] -0.8065902 -0.9709539 -0.7020397

##

## [[2]]$.RNG.name

## [1] "base::Mersenne-Twister"

##

## [[2]]$.RNG.seed

## [1] 29379

##

##

## [[3]]

## [[3]]$beta

## [1] -0.3516978 -0.9144069 -1.7397631 -0.1083395

##

## [[3]]$alpha

## [1] 0.9067457 -0.4471829 0.1170837

##

## [[3]]$.RNG.name

## [1] "base::Mersenne-Twister"

##

## [[3]]$.RNG.seed

## [1] 83619When a function is supplied, the function is evaluated by JointAI and the

resulting list is stored in the JointAI object.

6.6.3.1 For which Nodes can Initial Values be Specified?

Initial values can be specified for all unobserved stochastic nodes, i.e.,

parameters or unobserved data for which a distribution is specified in the

JAGS model. They have to be supplied in the format of the parameter or unobserved

value in the JAGS model.

To find out which nodes there are in a model and in which form they have to be

specified,

the function coef() from package rjags can be used to obtain a list

with the current values of the MCMC chains (by default the first chain)

from a JAGS model object. This object is contained in a JointAI

object under the name model.

Elements of the initial values should have the same structure as the elements in

this list of current values.

Example:

We are using a longitudinal model and the simLong data in this example.

Here we only show some relevant parts of the output.

mod12c <- lme_imp(bmi ~ time + HEIGHT_M + hc + SMOKE, random = ~ time | ID,

no_model = 'time', data = simLong)

# coef(mod12c$model)RinvD is the scale matrix in the Wishart prior for the inverse of the

random effects variance-covariance matrix D.

In the data that is passed to JAGS (which is stored in the element data_list

in a JointAI object), this matrix is specified as a diagonal matrix,

with unknown diagonal elements:

## $RinvD

## [,1] [,2]

## [1,] NA 0

## [2,] 0 NAThese diagonal elements are estimated in the model and have a gamma prior. The corresponding part of the JAGS model is:

## [...]

## # Priors for the covariance of the random effects

## for (k in 1:2){

## RinvD[k, k] ~ dgamma(shape_diag_RinvD, rate_diag_RinvD)

## }

## invD[1:2, 1:2] ~ dwish(RinvD[ , ], KinvD)

## D[1:2, 1:2] <- inverse(invD[ , ])

## [...]The element RinvD in the initial values has to be a \(2\times2\) matrix,

with positive values on the diagonal and NA as off-diagonal elements,

since these are fixed in the data:

## $RinvD

## [,1] [,2]

## [1,] 0.03648925 NA

## [2,] NA 0.07655993Lines 82 through 85 of the design matrix of the fixed effects of baseline

covariates, Xc, in the data are:

## (Intercept) HEIGHT_M SMOKEsmoked until[...] SMOKEcontin[...]

## 172.1 1 0.1148171 NA NA

## 173.1 1 NA NA NA

## 174.1 1 0.5045126 NA NA

## 175.1 1 1.8822249 NA NAThe matrix Xc in the initial values has the same dimension as Xc in the

data. It has values where there are missing values in Xc in the data,

e.g., Xc[83, 2], and is NA elsewhere:

## $Xc

## [,1] [,2] [,3] [,4]

## [1,] NA NA NA NA

##

## [...]

##

## [82,] NA NA NA NA

## [83,] NA 0.8028938 NA NA

## [84,] NA NA NA NA

##

## [...]There are no initial values specified for the third and fourth column, since

these columns contain the dummy variables corresponding to the categorical

variable SMOKE and are calculated from the corresponding column in the matrix Xcat,

i.e., these are deterministic nodes, not stochastic nodes.

The relevant part of the JAGS model is:

## [...]

## # ordinal model for SMOKE

## Xcat[i, 1] ~ dcat(p_SMOKE[i, 1:3])

## [...]

## Xc[i, 3] <- ifelse(Xcat[i, 1] == 2, 1, 0)

## Xc[i, 4] <- ifelse(Xcat[i, 1] == 3, 1, 0)

## [...]Elements that are completely unobserved, like the parameter vectors alpha

and beta, the random effects b or scalar parameters delta_SMOKE and

gamma_SMOKE are entirely specified in the initial values.

6.6.4 Parallel Sampling

To reduce the computational time it is possible to perform sampling of multiple

MCMC chains in parallel. This can be specified by setting

the argument parallel = TRUE. The maximum number of cores to be used can be

set with the argument ncores. By default this is two less than the number of

cores available on the machine, but never more than the number of MCMC chains.

Parallel computation is done using the packages foreach (Microsoft and Weston 2017) and doParallel (Corporation and Weston 2018). Note that it is currently not possible to display a progress bar when using parallel computation.

6.7 After Fitting

Any of the main functions *_imp() will return an object of class JointAI.

It contains the original data (data),

information on the type of model (call, analysis_type, models,

fixed, random, hyperpars, scale_pars)

and MCMC sampling (mcmc_settings),

the JAGS model (model) and MCMC sample (MCMC; if a sample was

generated), the computational time (time)

of the MCMC sampling, and some additional

elements that are used by methods for objects of class JointAI

but are typically not of interest for the user.

In the remaining part of this section, we describe how the results from a

JointAI model can be visualized, summarized and evaluated.

The functions described here use, by default, the full MCMC sample

and show only the parameters of the analysis model.

Arguments start, end and thin are available to select a subset of the MCMC

samples that is used to calculate the summary. The argument subset allows the user to control

for which nodes the summary or visualization is returned

and follows the same logic as

the argument monitor_params in *_imp().

The use of these arguments is further explained in Section 6.7.4.

6.7.1 Visualizing the Posterior Sample

The posterior sample can be visualized by two commonly used plots: a traceplot, showing samples across iterations, and a plot of the empirical density of the posterior sample.

Traceplot

A traceplot shows the sampled values per chain and node throughout

iterations. It allows the visual evaluation of convergence and mixing of the chains

and can be obtained with the function traceplot().

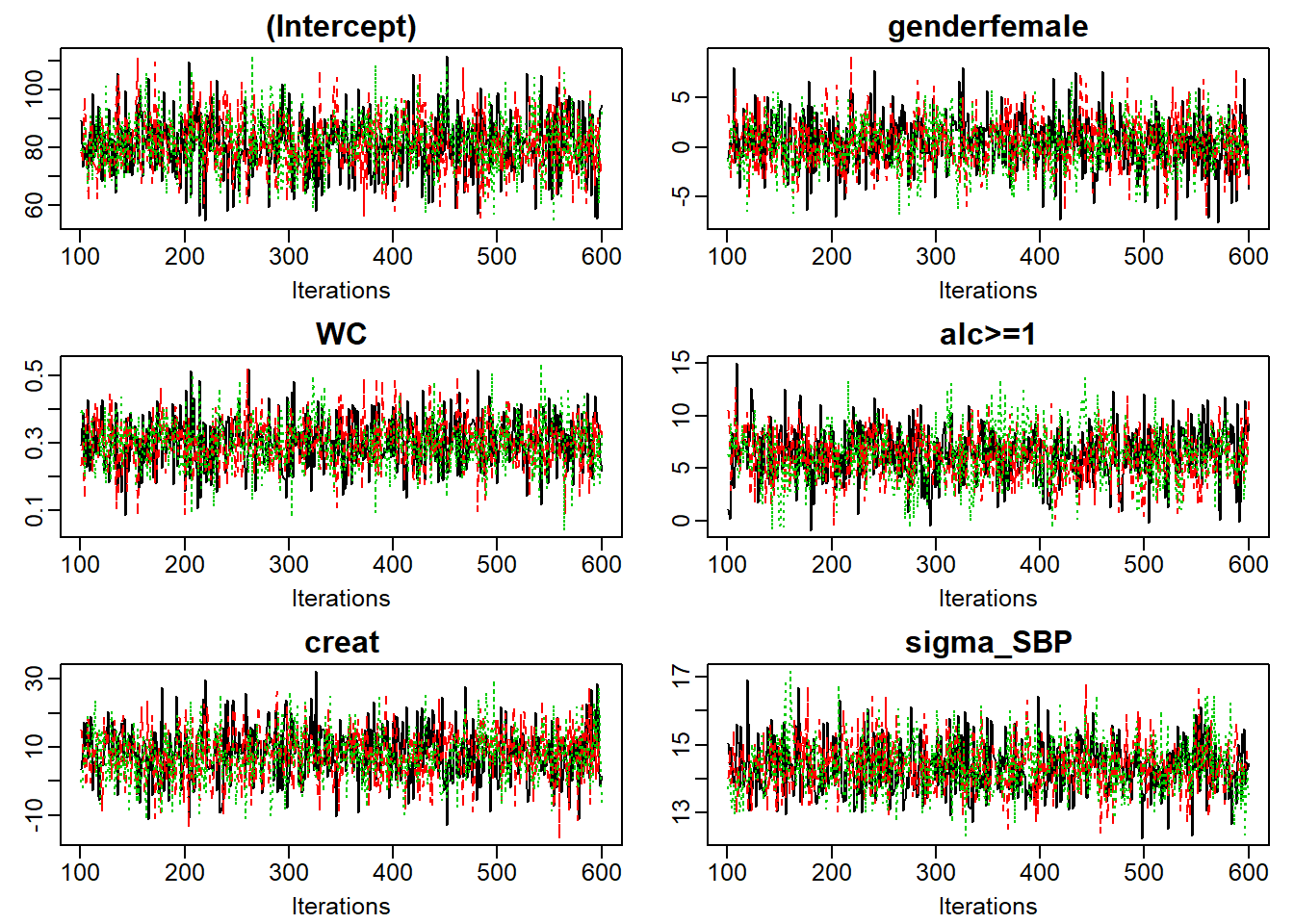

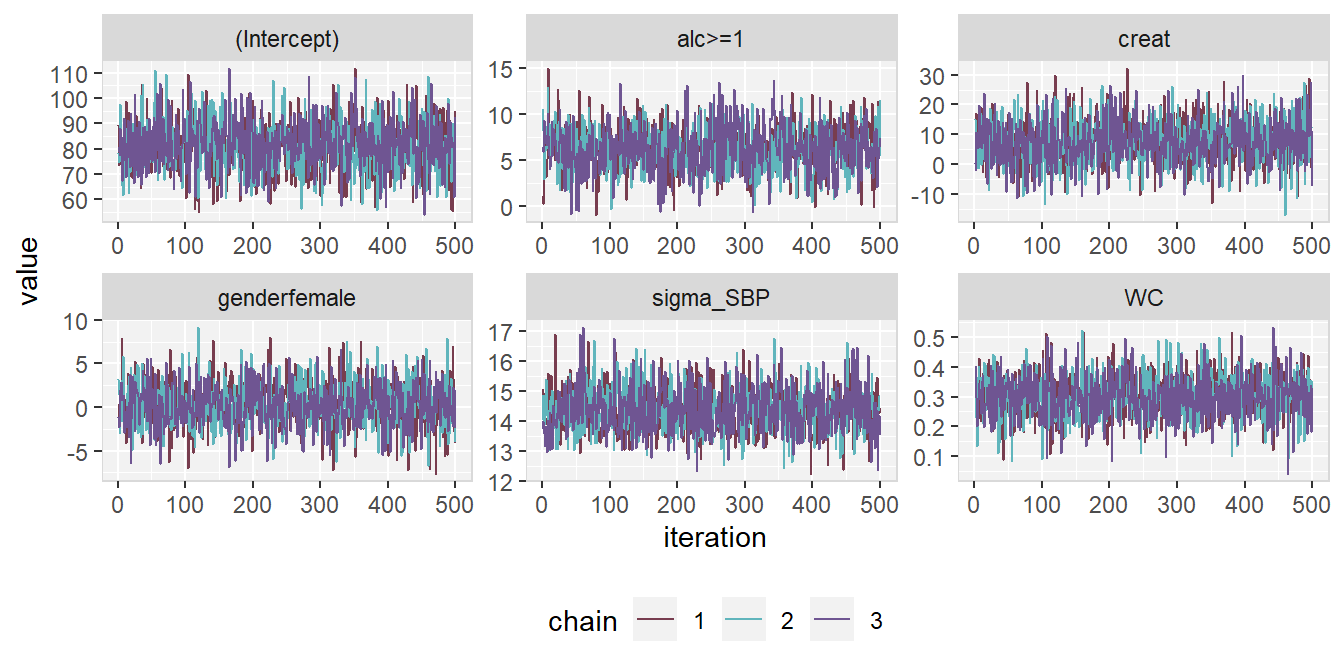

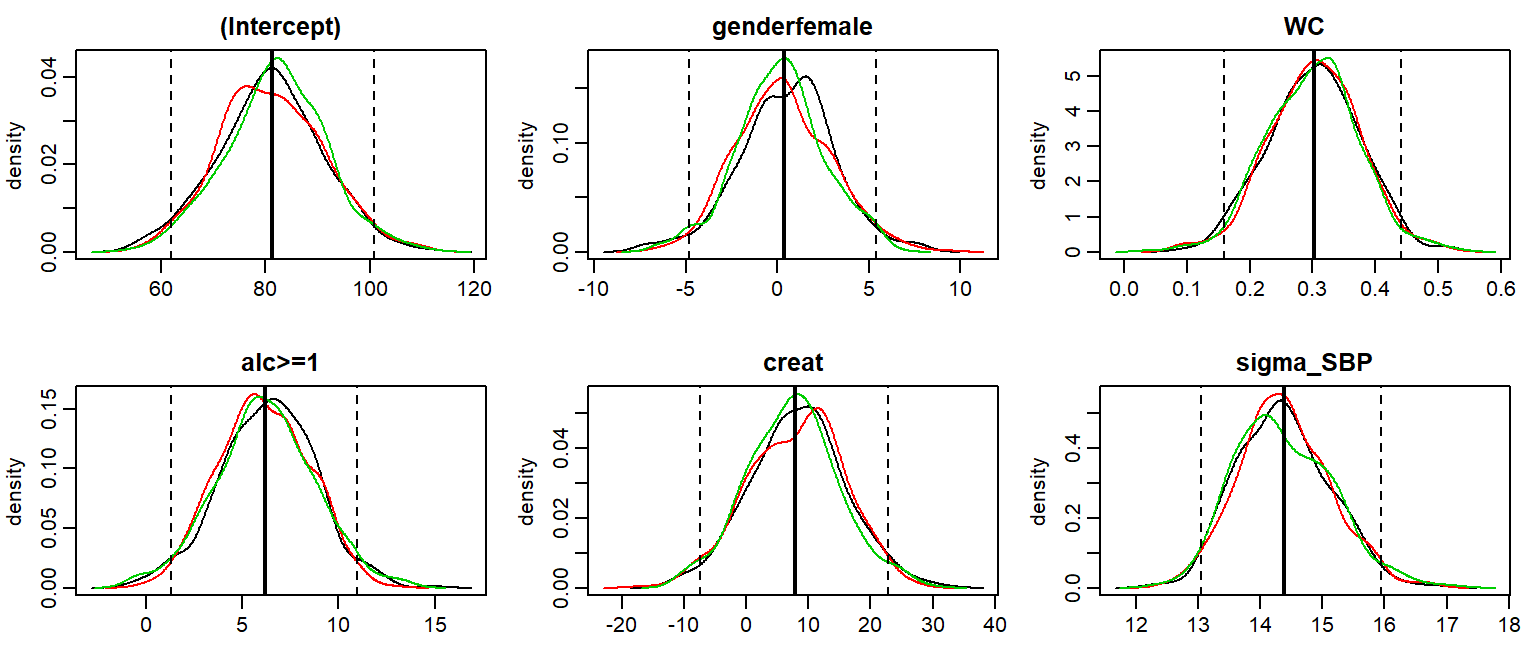

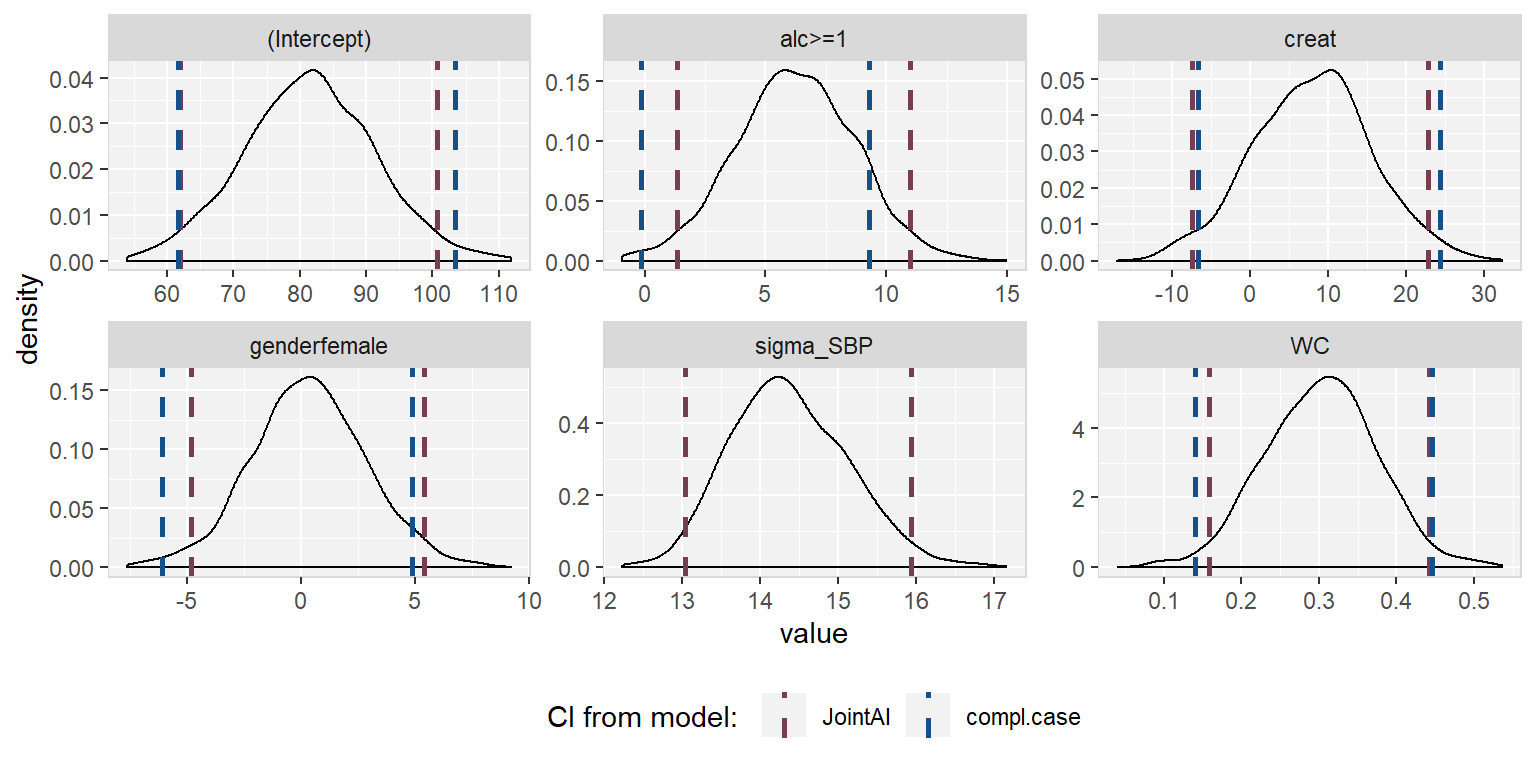

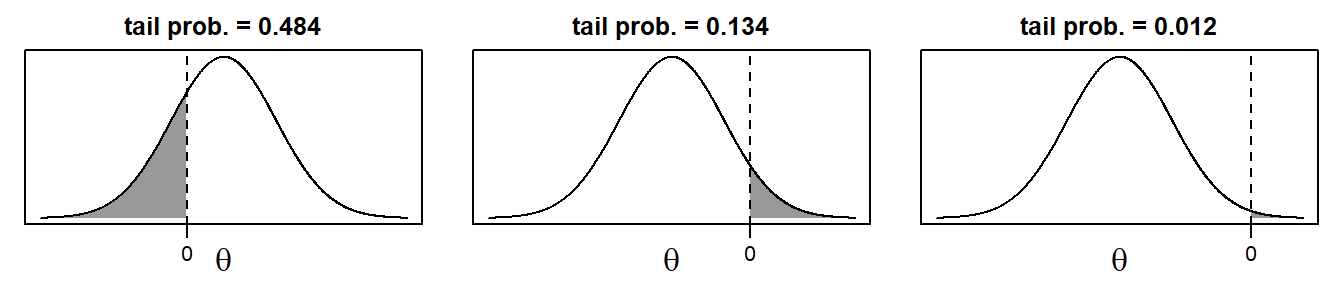

Figure 6.8: Traceplot of mod13a.

When the sampler has converged the chains show one horizontal band, as in

Figure 6.8.

Consequently, when traces show a trend, convergence has not been

reached and more iterations are necessary (e.g., using add_samples()).

Graphical aspects of the traceplot can be controlled by specifying

standard graphical arguments via the dots argument "...", which are passed to

matplot().

This allows the user to change colour, linetype and -width, limits, etc.

Arguments nrow and/or ncol can be supplied to set specific numbers of

rows and columns for the layout of the grid of plots.

With the argument use_ggplot it is possible to get a ggplot2 (Wickham 2016)

version of the traceplot. It can be extended using standard ggplot2 syntax.

The output of the following syntax is shown in Figure 6.9.

library(ggplot2)

traceplot(mod13a, ncol = 3, use_ggplot = TRUE) +

theme(legend.position = 'bottom',

panel.background = element_rect(fill = grey(0.95)),

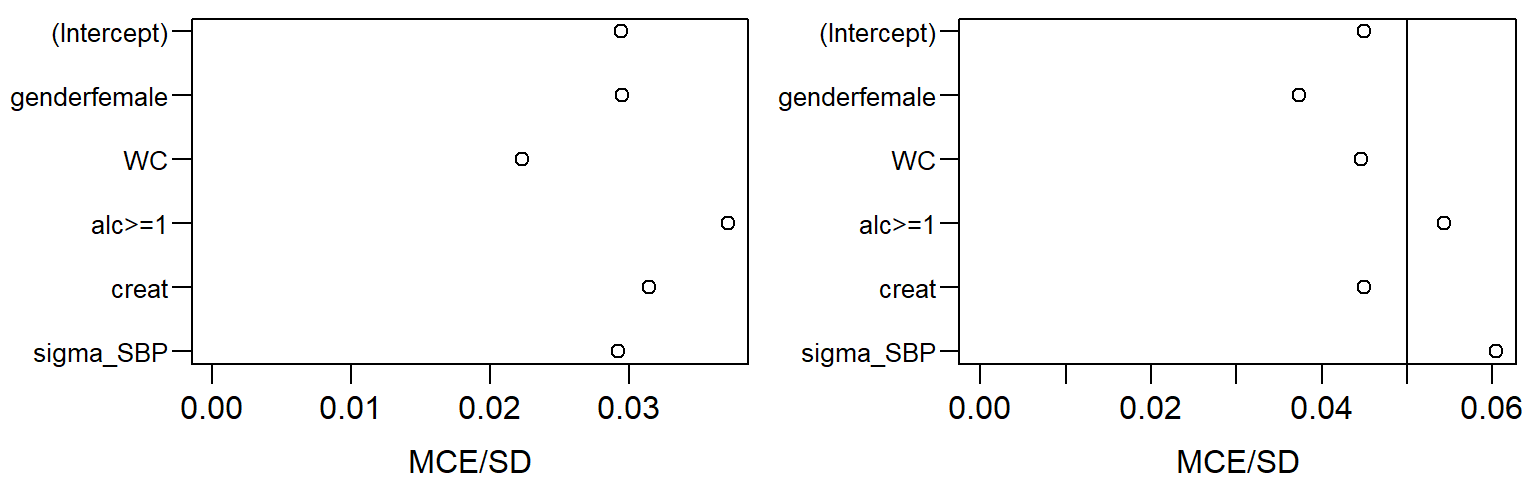

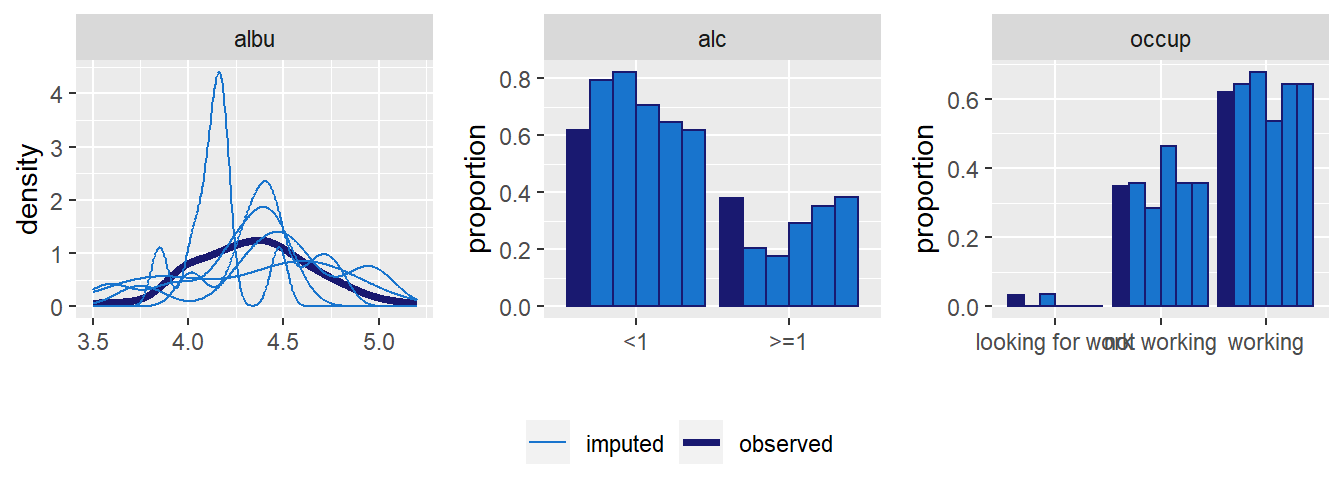

panel.border = element_rect(fill = NA, color = grey(0.85)),